How should we manage data? It’s a question that baffles us every time we look at data.

The real challenge is not managing data as such, but coordinating processes so that problems in the data come to light. Today’s marketers may have a tough time with this, and those who are less tech-savvy may feel a bit overwhelmed. The R programming language can help: it handles many of the specific tasks involved in preparing data for machine learning models or advanced analytics.

Basics of R

R is a popular open-source language well suited to data visualization and statistical modeling. It can be run from a terminal, but you can also use development environment software that makes R considerably more user-friendly.

One of the most sought-after integrated development environments (IDEs) is RStudio. Practitioners like it largely for its four-pane layout, which lets users see their results in the console alongside the script editor.

Exploring Data with R

Importing data is the starting point of any analysis. Fortunately, many R libraries can interface with a database or an API, including twitteR, RMongo and jsonlite. A quick search of the Comprehensive R Archive Network (CRAN) will help you find them.
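As a minimal sketch of the import step, the snippet below uses jsonlite’s `fromJSON()` to turn a JSON string into a data frame. The string stands in for a real API response; the `campaign`, `clicks` and `spend` fields are hypothetical, and the snippet assumes jsonlite is already installed from CRAN.

```r
# Assumes jsonlite is installed: install.packages("jsonlite")
library(jsonlite)

# Hypothetical API response: a JSON array of campaign records.
api_response <- '[
  {"campaign": "spring_sale",  "clicks": 120, "spend": 45.5},
  {"campaign": "summer_promo", "clicks": 87,  "spend": 30.0}
]'

# By default, fromJSON() simplifies an array of objects into a data frame.
campaigns <- fromJSON(api_response)

str(campaigns)
```

In practice, `fromJSON()` also accepts a URL or a file path, so the same call can read directly from an endpoint that returns JSON.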

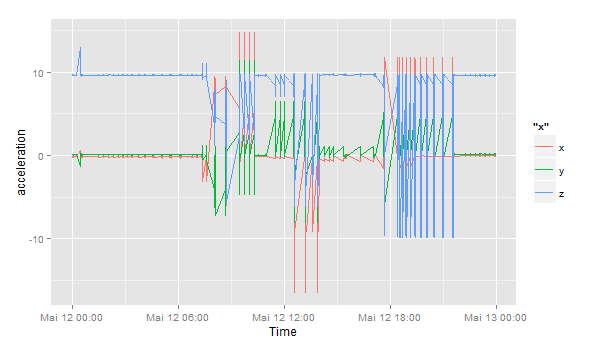

Next, turn your attention to data wrangling: the process of mapping data from one format to another by merging, splitting and rearranging rows and columns. Before you map out the metrics, determine which of the following mathematical categories each one falls into:

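- Discrete metrics, which take countable values (such as the number of purchases)

- Continuous metrics, which can take any value within a range (such as time spent on a page)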
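The wrangling operations described above (merging, splitting and rearranging) can be sketched in base R. The `sessions` and `orders` tables, their columns and the `metric_type()` helper are hypothetical; in particular, treating integer counts as discrete and doubles as continuous is only a rough heuristic, not something R enforces.

```r
# Hypothetical tables: web sessions and orders, keyed by customer_id.
sessions <- data.frame(
  customer_id = c(1, 2, 3),
  channel     = c("email", "search", "social"),
  minutes     = c(3.5, 12.25, 0.75)
)
orders <- data.frame(
  customer_id = c(1, 1, 3),
  purchases   = c(2L, 1L, 4L)
)

# Amalgamate: total purchases per customer, then join them onto sessions.
totals   <- aggregate(purchases ~ customer_id, data = orders, FUN = sum)
combined <- merge(sessions, totals, by = "customer_id", all.x = TRUE)
combined$purchases[is.na(combined$purchases)] <- 0L  # no orders means 0

# Divide: one data frame per channel.
by_channel <- split(combined, combined$channel)

# Rearrange: sort rows by time on site and reorder the columns.
combined <- combined[order(-combined$minutes),
                     c("customer_id", "channel", "purchases", "minutes")]

# Hypothetical heuristic: integer counts and labels are discrete,
# other numeric columns are treated as continuous.
metric_type <- function(x) {
  if (is.integer(x) || is.factor(x) || is.character(x)) "discrete" else "continuous"
}
sapply(combined[c("purchases", "minutes")], metric_type)
```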

Another important step is verifying the chosen columns: does the data source supply headers? If not, R lets you add headers as soon as the data is imported. A further question is whether the headers match the labels used by the other parties who have access to the data. The answer determines whether there is a more efficient way to access the data repeatedly without manually fixing columns before feeding it into a model.
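A minimal base R sketch of both checks: adding headers at import time, then renaming another team’s columns so the two sources line up. The CSV text, the column names and the `partner_labels` lookup are all hypothetical.

```r
# Hypothetical export that arrives without a header row.
raw_csv <- "2024-01-01,email,120
2024-01-02,search,87"

# header = FALSE stops R treating the first row as column names;
# col.names supplies the agreed labels during import.
visits <- read.csv(
  text      = raw_csv,
  header    = FALSE,
  col.names = c("date", "channel", "visits")
)

# Hypothetical mapping from another team's labels to ours.
partner_labels <- c(day = "date", source = "channel", sessions = "visits")
partner_df <- data.frame(day = "2024-01-03", source = "social", sessions = 42)
names(partner_df) <- unname(partner_labels[names(partner_df)])

# With matching headers, the sources stack without manual column fixes.
all_visits <- rbind(visits, partner_df)
all_visits
```

Keeping the label mapping in one named vector means it can be reused on every new delivery instead of fixing columns by hand each time.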

Some of the basic R libraries to consider are:

readr – reads rectangular data, such as CSV and TSV files, into R

tidyr – helps handle missing values and reshape tabular data into a consistent, tidy structure

dplyr – ideal for transforming data (filtering, selecting, summarizing) once it has been loaded into R
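The three packages often work together. The sketch below assumes readr, tidyr and dplyr are installed and uses a hypothetical campaign export: `read_csv()` parses the CSV text, `replace_na()` fills a missing value, and `group_by()` with `summarise()` totals clicks per campaign.

```r
# Assumes the packages are installed: install.packages(c("readr", "tidyr", "dplyr"))
library(readr)
library(tidyr)
library(dplyr)

# readr: parse hypothetical CSV text. I() marks the string as literal data
# rather than a file path; col_types = cols() lets readr guess the column
# types without printing its type message.
campaigns <- read_csv(
  I("campaign,channel,clicks\nspring,email,120\nspring,search,\nsummer,email,87\n"),
  col_types = cols()
)

# tidyr: the empty clicks field was read as NA; treat it as 0 clicks.
campaigns <- replace_na(campaigns, list(clicks = 0))

# dplyr: total clicks per campaign.
summary_tbl <- campaigns %>%
  group_by(campaign) %>%
  summarise(total_clicks = sum(clicks), .groups = "drop")

summary_tbl
```

Whether a missing value should become 0 is a business decision, not a technical one; the replacement here is only an assumption for the example.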

Marketing Knowledge Is Still an Add-On Factor

Finally, marketers should never ignore their domain knowledge when modeling data. Sometimes your experience will tell you the best way to handle an outlier in a model. Alternatively, it may prompt you to ask your technical team to adjust and manage data in the cloud when other teams need to access that data downstream.
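Domain knowledge decides what to do with an outlier, but code can at least surface the candidates for review. The sketch below swaps in one common heuristic, Tukey’s 1.5 × IQR rule, to flag (not delete) unusual values; the sign-up data and the `flag_outliers()` helper are hypothetical.

```r
# Hypothetical daily sign-ups with one sharp spike on day 8.
signups <- data.frame(
  day   = 1:8,
  count = c(40, 42, 38, 45, 41, 39, 44, 310)
)

# Tukey's rule: flag values more than k * IQR below the first quartile
# or above the third quartile. Flagged rows are kept for human review.
flag_outliers <- function(x, k = 1.5) {
  q   <- quantile(x, c(0.25, 0.75), names = FALSE)
  iqr <- q[2] - q[1]
  x < q[1] - k * iqr | x > q[2] + k * iqr
}

signups$outlier <- flag_outliers(signups$count)
signups[signups$outlier, ]
```

Here only day 8 is flagged. Whether that spike is a tracking error to correct or a genuine campaign effect to keep is exactly the kind of call the marketer’s experience should make.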

Relevant marketing knowledge is therefore essential: it helps you decide which data to query and how best to parse it.

If you are thinking of learning a popular and effective programming language to tame your data, consider an R programming certification course in Delhi NCR. Good R programming training will help you understand and evaluate data like a pro.

This blog first appeared on www.cmswire.com/digital-marketing/how-marketers-can-plan-data-mining-with-r-programming
