Python is a highly readable, versatile high-level programming language. Companies such as Google, YouTube, and Dropbox use it to develop applications. It is also used extensively in fields such as data analysis, machine learning, natural language processing, web development, scientific computing, image processing, robotics, and computer vision.

It supports both object-oriented and functional programming. Python is generally described as an interpreted language: statements are executed one at a time, and when the interpreter hits an error it stops immediately and prints an error message.

Another important feature of Python is its interactive prompt. A Python statement can be typed and executed immediately, in sharp contrast to compiled languages, where a program must be compiled before it can run.

What are Python 2.x and Python 3.x?

There are two main versions of Python: Python 2.x and Python 3.x. Newcomers are often unsure which one to use. The choice is now straightforward: the Python Software Foundation has formally announced that Python 2 reaches end of life (EOL) on January 1, 2020, so new work should target Python 3 and existing Python 2 code should be migrated.

Key differences between Python 2.x and Python 3.x

This article discusses the differences between these two versions of Python, making Python 3 less confusing for a new programmer.

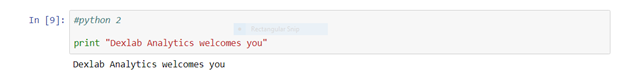

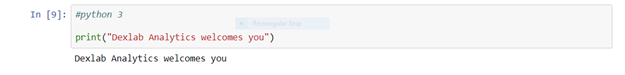

Print Function

In Python 2, print is a statement, so no parentheses are needed.

In Python 3, print is a function, so its arguments must be enclosed in parentheses.
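As a minimal sketch of what being a function means in practice, the Python 3 snippet below shows a plain call plus the `sep`, `end`, and `file` keyword arguments. The variable names `buffer` and `captured_text` are illustrative only.

```python
import io

# Python 3: print is an ordinary function, so parentheses are required.
# The Python 2 form  print "Hello, World!"  is a SyntaxError here.
print("Hello, World!")

# Keyword arguments control the separator and the line ending.
print("a", "b", "c", sep="-", end="!\n")   # a-b-c!

# Output can be sent to any file-like object through file=.
buffer = io.StringIO()
print("captured", file=buffer)
captured_text = buffer.getvalue()          # "captured\n"
```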

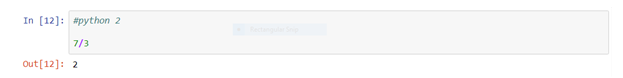

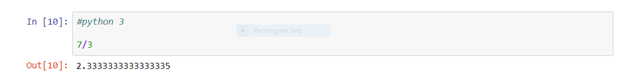

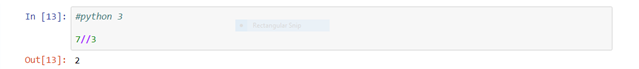

Integer Division

In Python 2, dividing two integers with the / operator yields an integer, with the fractional part discarded. For example, 7/3 = 2.

In Python 3, dividing two integers with / performs true division and returns a float. For example, 7/3 is approximately 2.33.

To get an integer result in Python 3, use the floor division operator (//). For example, 7//3 = 2.
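The two operators can be compared side by side in Python 3. This is a small sketch; the variable names are illustrative, and the negative case is included because floor division rounds toward negative infinity rather than toward zero.

```python
true_quotient = 7 / 3      # true division: always a float in Python 3
floor_quotient = 7 // 3    # floor division: an int when both operands are ints
negative_floor = -7 // 3   # floors toward negative infinity, not toward zero

print(true_quotient)       # 2.3333333333333335
print(floor_quotient)      # 2
print(negative_floor)      # -3
```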

Unicode Support

The two versions of Python handle strings (sequences of characters) differently.

Python 2 uses the ASCII encoding by default. ASCII can represent only 128 characters, which limits Python 2's ability to handle text outside basic English. Using Unicode in Python 2 requires extra syntax: for example, text containing special characters must be written as a u'...' literal or passed through the unicode() function before it is printed.

In Python 3, Unicode is the default: every string is a sequence of Unicode characters. The Unicode standard is far more versatile and defines over 128,000 characters. No extra syntax is needed to define Unicode values, and UTF-8 is the default source encoding.
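A short Python 3 sketch of the distinction between text and bytes follows. The sample string is an arbitrary choice, written with escape sequences so that it does not depend on how this page is encoded.

```python
# "naive cafe" with two accented letters: U+00EF and U+00E9.
text = "na\u00efve caf\u00e9"        # str: a sequence of Unicode characters
encoded = text.encode("utf-8")       # bytes: an explicit UTF-8 encoding
decoded = encoded.decode("utf-8")    # back to str

print(type(text).__name__, len(text))        # str 10
print(type(encoded).__name__, len(encoded))  # bytes 12
print(decoded == text)                       # True
```

The character count and the byte count differ because each accented letter takes two bytes in UTF-8.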

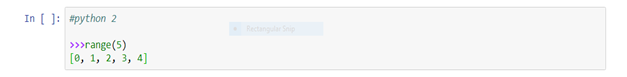

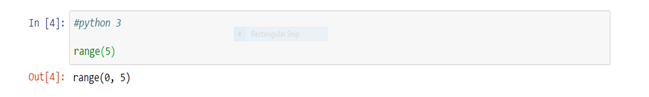

Range Function

In Python 2, the range function returns a list of numbers.

Python 2 also has the xrange class, an iterable that yields the same numbers one at a time without building the whole list in memory.

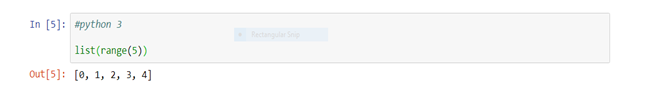

In Python 3, the original list-returning range function is removed and xrange is renamed to range.

In Python 3, the range object must be converted with list() to get the same result that range returns in Python 2.
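The behavior described above can be sketched in Python 3 as follows. The names `numbers`, `as_list`, and `big` are illustrative.

```python
numbers = range(5)            # lazy range object; no list is built
as_list = list(range(5))      # explicit list, like range(5) in Python 2

print(numbers)                # range(0, 5)
print(as_list)                # [0, 1, 2, 3, 4]

# A range object still supports len(), indexing, and membership tests
# without ever materializing its elements.
big = range(10**9)
print(len(big), big[-1], 999 in big)
```

Because the range object computes its values on demand, even a very large range costs almost no memory until it is converted to a list.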

Input() Method

The input() function is generally expected to read input as a string, which can then be converted to any data type as required.

Python 2 has both input() and raw_input() for taking input. raw_input() returns the input as a string, whereas input() evaluates what was typed as a Python expression: a quoted value becomes a string, and a bare number such as 5 becomes an integer.

Python 3 has no raw_input() function. Its behavior is now provided by input(), which always returns a string.

The Python 2 behavior of input() can still be reproduced in Python 3 by wrapping the call in eval(), although evaluating untrusted input is unsafe.
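In Python 3, the usual pattern is to convert the string returned by input() explicitly rather than to evaluate it. The helper below is a hypothetical sketch: `read_int` and its `reader` parameter are not part of Python, and `reader` exists only so that the function can be exercised without a keyboard.

```python
def read_int(prompt, reader=input):
    """Read one line of text and convert it to an int explicitly."""
    raw = reader(prompt)       # input() always returns a str in Python 3
    return int(raw.strip())    # raises ValueError if the text is not an integer


# Stand-in for a user typing " 42 " at the prompt (assumption for the demo).
age = read_int("Age: ", reader=lambda _prompt: " 42 ")
print(age + 1)                 # 43
```

In an interactive program the call would simply be `read_int("Age: ")`, which falls back to the built-in input().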

There are many other differences between Python 2 and Python 3, including the following.

Next() Method

In Python 2, the next element of an iterator is fetched by calling its .next() method. In Python 3, that method is renamed to __next__(), and the built-in next() function is used to fetch the next element instead.
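A brief Python 3 sketch of iterating by hand is shown below; the list contents are arbitrary.

```python
numbers = iter([10, 20, 30])

first = next(numbers)              # 10, the idiomatic Python 3 form
second = numbers.__next__()        # 20, the method that next() calls
third = next(numbers)              # 30
exhausted = next(numbers, None)    # None: default instead of StopIteration

# The Python 2 spelling numbers.next() raises AttributeError in Python 3.
print(first, second, third, exhausted)   # 10 20 30 None
```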

Raising Exception

To raise an exception with a message in Python 3, the message must be passed as an argument in parentheses, as in raise ValueError("message"). Python 2 also accepts the older form raise ValueError, "message".
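The Python 3 form can be sketched as follows. The function `check_age` is a hypothetical example, not something from a library.

```python
def check_age(age):
    """Return age unchanged, or raise ValueError for a negative value."""
    if age < 0:
        # Python 3 form: the message is an argument to the exception class.
        # The Python 2 form  raise ValueError, "..."  is a SyntaxError here.
        raise ValueError("age must be non-negative")
    return age


try:
    check_age(-1)
except ValueError as error:
    message = str(error)

print(message)   # age must be non-negative
```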

Handling Exception

Exception handling has also changed. In Python 3, the “as” keyword is required to bind the caught exception to a name (except ValueError as e), whereas Python 2 also accepts a comma (except ValueError, e).
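Here is a minimal Python 3 sketch of the “as” form, which also catches several exception types at once by listing them in a tuple. The function `parse_ratio` is a hypothetical example.

```python
def parse_ratio(numerator, denominator):
    """Divide two numbers given as text, reporting the failure type on error."""
    try:
        return int(numerator) / int(denominator)
    except (ValueError, ZeroDivisionError) as error:
        # "as error" binds the caught exception; a comma here is a
        # SyntaxError in Python 3.
        return "error: {}".format(type(error).__name__)


print(parse_ratio("6", "3"))   # 2.0
print(parse_ratio("1", "0"))   # error: ZeroDivisionError
print(parse_ratio("x", "1"))   # error: ValueError
```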

Beginners are therefore strongly advised to use Python 3, because it is the future of the language and January 1, 2020 is the last day of support for Python 2. After that date Python 2 receives no further improvements, not even fixes for newly discovered security problems.

Upgrading existing code to Python 3 is highly recommended. Several tools help Python 2 users port their code and see how Python 3 differs from Python 2. Code can be ported with tools such as “Futurize” and “Modernize”. To check as part of a test suite whether a project's dependencies are available on Python 3, “caniusepython3.check()” can be used.

As a final note, everyone should plan to upgrade to Python 3, both to learn the subtleties of the new version and to stay current with the language. If you are interested in deep learning for computer vision with Python and similar courses, then opt for the premium Python training institute in Delhi now!
