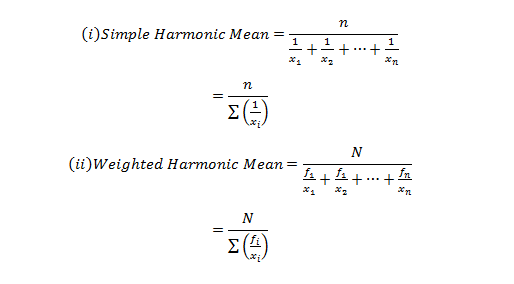

The harmonic mean of a set of observations is the number of observations divided by the sum of the reciprocals of the values. It is undefined if any of the values is zero.
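The definition above can be written directly as a short function. This is a minimal sketch in plain Python; the function name `harmonic_mean` and the choice to raise `ValueError` on a zero are assumptions made for illustration.

```python
def harmonic_mean(values):
    """Return n divided by the sum of the reciprocals of the values."""
    values = list(values)
    if not values:
        raise ValueError("harmonic mean needs at least one value")
    if any(v == 0 for v in values):
        # 1/0 has no value, so the harmonic mean is undefined here
        raise ValueError("harmonic mean is undefined when a value is zero")
    return len(values) / sum(1 / v for v in values)


# Two values, 40 and 60: 2 / (1/40 + 1/60), which is very close to 48
print(harmonic_mean([40, 60]))
```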

This post continues from STATISTICAL APPLICATION IN R & PYTHON: CHAPTER 1 – MEASURE OF CENTRAL TENDENCY. Here we look at the Harmonic mean and its application using Python and R.

Application:

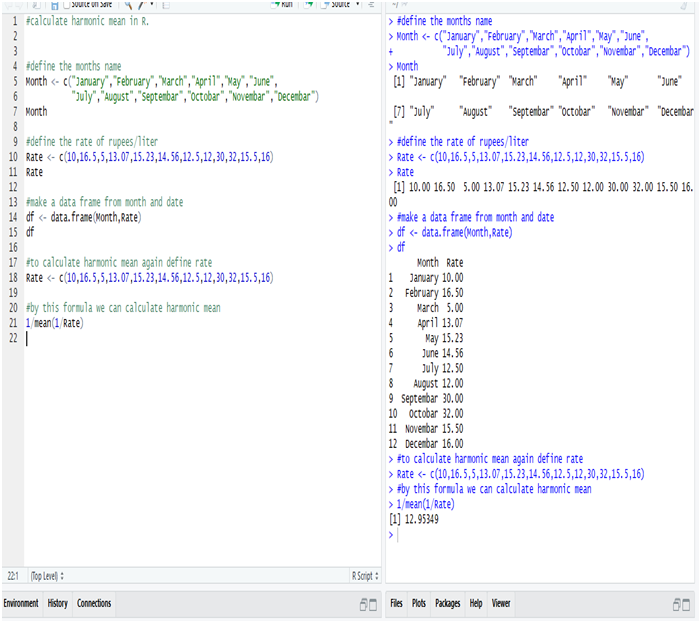

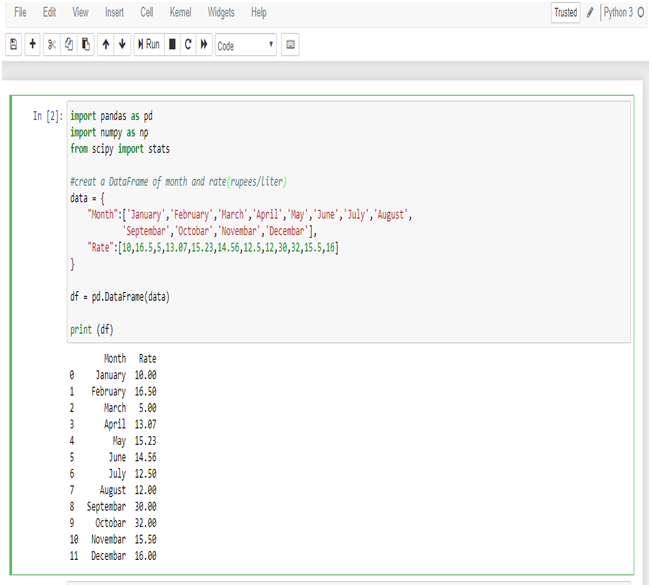

A milk company sold milk at the rates of 10, 16.5, 5, 13.07, 15.23, 14.56, 12.5, 12, 30, 32, 15.5 and 16 rupees per liter in twelve different months (January to December). If a family spends an equal amount of money on milk in each of the twelve months, calculate the average price in rupees per liter.

Table for the problem:

| Month | Rate (Rupees/Liter) |
|---|---|
| January | 10 |
| February | 16.5 |
| March | 5 |
| April | 13.07 |
| May | 15.23 |
| June | 14.56 |
| July | 12.5 |
| August | 12 |
| September | 30 |
| October | 32 |
| November | 15.5 |
| December | 16 |
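Why the harmonic mean is the right average here can be checked by following the money. The sketch below assumes a hypothetical budget of 100 rupees per month (any fixed amount gives the same result), works out how many liters that buys each month, and compares total money spent divided by total liters bought with the harmonic mean formula.

```python
rates = [10, 16.5, 5, 13.07, 15.23, 14.56, 12.5, 12, 30, 32, 15.5, 16]

budget = 100.0  # hypothetical amount spent each month, in rupees

# Liters bought each month with the same budget
liters = [budget / r for r in rates]

total_spent = budget * len(rates)
total_liters = sum(liters)

# Average price actually paid per liter over the year
average_rate = total_spent / total_liters

# The same figure from the harmonic mean formula: n / sum of reciprocals
harmonic = len(rates) / sum(1 / r for r in rates)

print(round(average_rate, 5), round(harmonic, 5))  # prints 12.95349 12.95349
```

The budget cancels out of the ratio, which is why the average rate depends only on the reciprocals of the monthly rates.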

Calculate Harmonic Mean in R:

So, the average rate of the milk is 12.95349 ≈ 13 Rs/liter.

We get this answer from the Harmonic Mean, calculated in R.

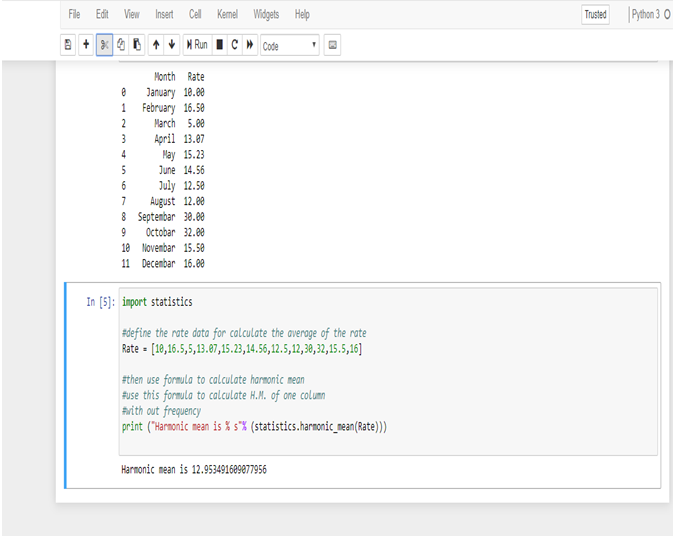

Calculate Harmonic Mean in Python:

First, make a data frame of the available data in Python.

Now, calculate the Harmonic mean from this data frame.
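A minimal sketch of this calculation follows. It assumes the rates are held in a plain Python list rather than a data frame, and it uses `statistics.harmonic_mean` from the standard library, which is not necessarily the function used for the original result.

```python
from statistics import harmonic_mean

months = ["January", "February", "March", "April", "May", "June",
          "July", "August", "September", "October", "November", "December"]
rates = [10, 16.5, 5, 13.07, 15.23, 14.56, 12.5, 12, 30, 32, 15.5, 16]

# Pair each month with its rate, mirroring the two columns of the table
milk = dict(zip(months, rates))

# Harmonic mean of the twelve monthly rates, in rupees per liter
hm = harmonic_mean(list(milk.values()))

print(round(hm, 5))  # prints 12.95349
```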

So, the average rate of the milk is 12.953491609077956 ≈ 13 Rs/liter.

We get this answer from the Harmonic mean, calculated in Python.

Summing it Up:

In this data, a few large values (30 and 32 rupees per liter) pull the Arithmetic mean up to about 16.03 Rs/liter. Because the family spends the same amount each month, it buys more milk when the rate is low, so the Harmonic mean (about 12.95 Rs/liter) is the correct average rate.

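The Harmonic mean has a narrower range of uses than the Arithmetic mean. It gives the largest weight to the smallest item and the smallest weight to the largest item.

Where there are a few extremely large values, the Harmonic mean is less affected by them than the Arithmetic mean. It is, however, pulled down strongly by extremely small values, and it is undefined if any value is zero.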
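The weighting can be made concrete with the milk rates. The sketch below, again using the standard library `statistics` module as an assumption, compares the two means and shows that the Harmonic mean equals a weighted Arithmetic mean in which each rate's weight is proportional to its reciprocal, so the lowest rate carries the most weight.

```python
from statistics import harmonic_mean, mean

rates = [10, 16.5, 5, 13.07, 15.23, 14.56, 12.5, 12, 30, 32, 15.5, 16]

am = mean(rates)
hm = harmonic_mean(rates)

# Weight of each rate: its reciprocal as a share of all reciprocals
reciprocal_total = sum(1 / r for r in rates)
weights = [(1 / r) / reciprocal_total for r in rates]

# Weighted arithmetic mean with those weights reproduces the harmonic mean
weighted_mean = sum(w * r for w, r in zip(weights, rates))

print(round(am, 2), round(hm, 2))  # prints 16.03 12.95

# The smallest rate (5) has the largest weight, the largest rate (32) the smallest
print(rates[weights.index(max(weights))], rates[weights.index(min(weights))])  # prints 5 32
```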

The Harmonic mean is mainly useful in averages involving time, rate & price.

Note – If you want to learn the calculation of Geometric Mean, you can check our post on CALCULATING GEOMETRIC MEAN USING R AND PYTHON.

