It is no longer news that artificial intelligence can control self-driving cars, beat the best humans at highly challenging board games like chess, and even help fight cancer. But one thing it still cannot do perfectly is communicate.

To help solve this problem, Google has been feeding its artificial intelligence more than 11,000 unpublished books, including more than 3,000 steamy romance titles. In response, the AI has penned its own mournful poems.

The poems read something like this:

I went to the store to buy some groceries.

I store to buy some groceries.

I were to buy any groceries.

Horses are to buy any groceries.

Horses are to buy any animal.

Horses the favourite any animal.

Horses the favourite favourite animal.

Horses are my favourite animal.

And here is another one from Google’s AI:

he said.

“no,” he said.

“no,” i said.

“i know,” she said.

“thank you,” she said.

“come with me,” she said.

“talk to me,” she said.

“don’t worry about it,” she said.

Here is how it happened: Google’s team fed the unpublished works into a neural network and then gave the system two sentences from a book; it was then up to the AI to build its own poetry from the information available.

In the first example above, the researchers gave the AI two sentences, one about buying groceries and the other about horses being a favourite animal (these are the first and last lines of the passage). The team then directed the AI to morph between the two sentences.

In the research paper, the team went on to explain that the AI system was able to “create coherent and diverse sentences through purely continuous sampling”.

The team produced these sentences with an autoencoder, a type of neural network that learns from a data set to reproduce a result, in this case written sentences, using far fewer steps.
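One way to picture the morphing between two sentences is as a walk along a straight line between two points in the autoencoder's internal number space. The snippet below is only a minimal sketch of that idea, not Google's implementation: the function name `interpolate` and the three-number codes are made up for illustration, and the encoder and decoder networks that would turn sentences into codes and back are assumed and not shown.

```python
from typing import List


def interpolate(z_start: List[float], z_end: List[float], steps: int) -> List[List[float]]:
    """Return `steps` evenly spaced points on the line from z_start to z_end."""
    if steps < 2:
        raise ValueError("need at least two steps (the two endpoints)")
    if len(z_start) != len(z_end):
        raise ValueError("both codes must have the same length")
    path = []
    for i in range(steps):
        t = i / (steps - 1)  # t runs from 0.0 (start) to 1.0 (end)
        path.append([(1 - t) * a + t * b for a, b in zip(z_start, z_end)])
    return path


# Hypothetical codes standing in for the two given sentences.
# In a real system an encoder network would produce these (assumption).
z_groceries = [0.0, 1.0, -2.0]
z_horses = [4.0, -1.0, 2.0]

path = interpolate(z_groceries, z_horses, steps=5)
for point in path:
    # A trained decoder (not shown) would turn each point into a sentence.
    print(point)
```

Each intermediate point would be decoded into one line of the poem, which is why the lines drift gradually from groceries to horses.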

The main goal of this research is to create an artificial intelligence that is proficient at communicating via “natural language sentences”.

This research opens up the possibility of developing a system that can communicate in a more human-like manner. Such a breakthrough is essential for creating more useful and responsive chatbots and AI-powered personal assistants such as Siri and Google Now.

In a similar project, researchers at Google have been teaching an AI to understand language by having it replicate and predict the work of bygone authors and poets, using texts from Project Gutenberg.

This separate team at Google fed the AI an input sentence and asked it to predict what should come next. By analysing the text, the AI could identify which author was likely to have written the sentence and emulate that author’s style.

In another instance, in June 2015, another team of researchers at Google created a chatbot that even threatened its creators. The AI learned the art of conversation by analysing a million movie scripts, which allowed it to muse on the meaning of life, the colour of blood, and even deeper subjects like mortality, so much so that the bot could even get angry at its human inquisitor. When asked the puzzling philosophical question of what the meaning of life is, it replied: “to live forever”.

In similar work, Facebook has been teaching its artificial intelligence using children’s books. According to New Scientist, the social network has been using novels such as The Jungle Book, Alice in Wonderland and Peter Pan.

If you are yearning for more of the AI’s written word, here are the rest of Google AI’s poems.

You’re right.

“All right.

You’re right.

Okay, fine.

“Okay, fine.

Yes, right here.

No, not right now.

“No, not right now.

“Talk to me right now.

Please talk to me right now.

I’ll talk to you right now.

“I’ll talk to you right now.

“You need to talk to me now. —

Amazing, isn’t it?

So, what is it?

It hurts, isn’t it?

Why would you do that?

“You can do it.

“I can do it.

I can’t do it.

“I can do it.

“Don’t do it.

“I can do it.

I couldn’t do it. —

There is no one else in the world.

There is no one else in sight.

They were the only ones who mattered.

They were the only ones left.

He had to be with me.

She had to be with him.

I had to do this.

I wanted to kill him.

I started to cry.

I turned to him. —

I don’t like it, he said.

I waited for what had happened.

It was almost thirty years ago.

It was over thirty years ago.

That was six years ago.

He had died two years ago.

Ten, thirty years ago. —

“it’s all right here.

“Everything is all right here.

“It’s all right here.

It’s all right here.

We are all right here.

Come here in five minutes.

“But you need to talk to me now.

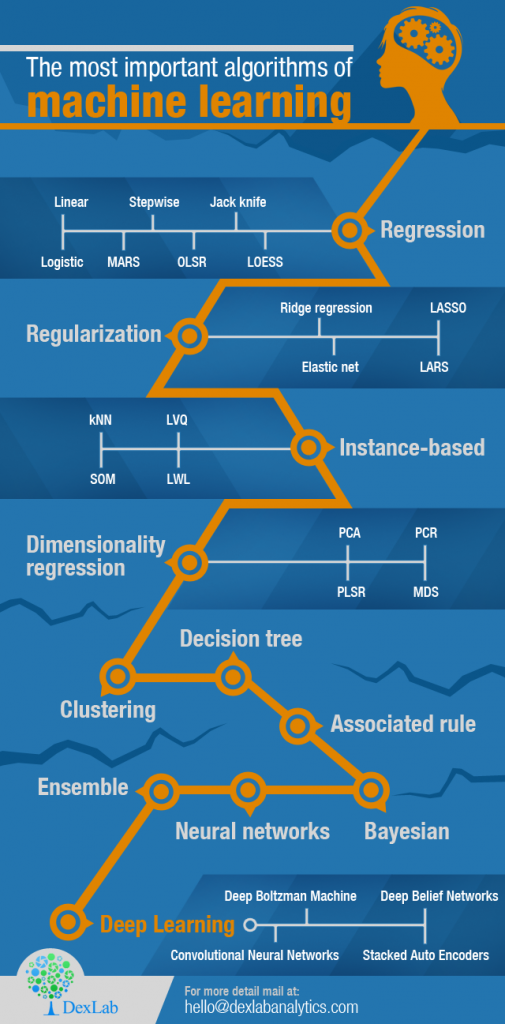
