Computer vision is a field of computer science and artificial intelligence that aims to replicate parts of the human visual system, enabling computers to identify and process objects in images and videos in much the same way that humans do. With computer vision, a computer can extract, analyze, and understand useful information from a single image or a sequence of images, and then provide an appropriate output.

Initially, computer vision worked only in a limited capacity, but thanks to advances in deep learning and neural networks, the field has taken great leaps in recent years and has surpassed humans in some tasks related to detecting and labeling objects.

The Contribution of Deep Learning in Computer Vision

While there are still significant obstacles in the path of human-quality computer vision, deep learning systems have made significant progress in dealing with some of the relevant sub-tasks. This success is partly due to the additional responsibility assigned to deep learning systems: they learn which features to look for instead of having those features specified by hand.

It is reasonable to say that the biggest difference with deep learning systems is that they no longer need to be programmed to specifically look for features. Rather than searching for specific features by way of a carefully programmed algorithm, the neural networks inside deep learning systems are trained. For example, if cars in an image keep being misclassified as motorcycles then you don’t fine-tune parameters or re-write the algorithm. Instead, you continue training until the system gets it right.
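The idea of training instead of hand-tuning can be illustrated with a very small sketch. This is not a deep network: it is a single logistic neuron trained by gradient descent on a made-up one-feature dataset, and the names `predict`, `accuracy`, `train`, and `samples` are hypothetical, chosen only for illustration. It shows how misclassifications disappear as training continues, without anyone rewriting the decision rule by hand.

```python
import math


def predict(weight, bias, x):
    """Probability of class 1 from a single logistic neuron."""
    return 1.0 / (1.0 + math.exp(-(weight * x + bias)))


def accuracy(weight, bias, samples):
    """Fraction of samples whose thresholded prediction matches the label."""
    correct = sum(
        (predict(weight, bias, x) >= 0.5) == bool(label) for x, label in samples
    )
    return correct / len(samples)


def train(samples, learning_rate=0.5, epochs=100):
    """Fit weight and bias with plain batch gradient descent."""
    weight, bias = 0.0, 0.0
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, label in samples:
            error = predict(weight, bias, x) - label  # gradient of the log loss
            grad_w += error * x
            grad_b += error
        weight -= learning_rate * grad_w / len(samples)
        bias -= learning_rate * grad_b / len(samples)
    return weight, bias


# Made-up data (an assumption): one feature per example, label 0 or 1.
samples = [(-2.0, 0), (-1.0, 0), (1.0, 1), (2.0, 1)]

print("accuracy before training:", accuracy(0.0, 0.0, samples))
weight, bias = train(samples)
print("accuracy after training:", accuracy(weight, bias, samples))
```

Before training, the neuron labels every example the same way and gets half of them wrong; after training, all four examples are classified correctly. A real image classifier has many more parameters, but the loop has the same shape.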

With the increased computational power offered by modern-day deep learning systems, there is steady and noticeable progress towards the point where a computer will be able to recognize and react to everything that it sees.

Applications of Computer Vision

The field of computer vision is too expansive to cover in depth here. There are many advanced techniques, such as style transfer, colorization, action recognition, 3D objects, and human pose estimation, but in this article we will focus only on the most commonly used techniques of computer vision. These techniques are:

- Image Classification

- Image Classification with Localization

- Object Segmentation

- Object Detection

We will go through all of the above techniques and see in detail how deep learning is used for each of them. To avoid confusion, we will split this material into a series of blog posts. This first post covers the first technique, image classification, and explores how deep learning is used for it.

Image Classification

Classification is the process of predicting a specific class, or label, for something that is defined by a set of data points. Image classification is a subset of the classification problem, in which an entire image is assigned a label. For example, a picture might be classified as a daytime or nighttime shot. Similarly, images of cars and motorcycles can be automatically placed into their own groups.

There are countless categories, or classes, into which a specific image can be classified. Consider a manual process where images are compared and similar ones are grouped according to shared characteristics, but without necessarily knowing in advance what you are looking for. Obviously, this is an onerous task. To make it even more so, assume that the set of images numbers in the hundreds of thousands. It becomes readily apparent that an automatic system is needed to do this quickly and efficiently.

There are many image classification tasks that involve photographs of objects. Two popular examples include the CIFAR-10 and CIFAR-100 datasets that have photographs to be classified into 10 and 100 classes respectively.

Deep Learning for Image Classification

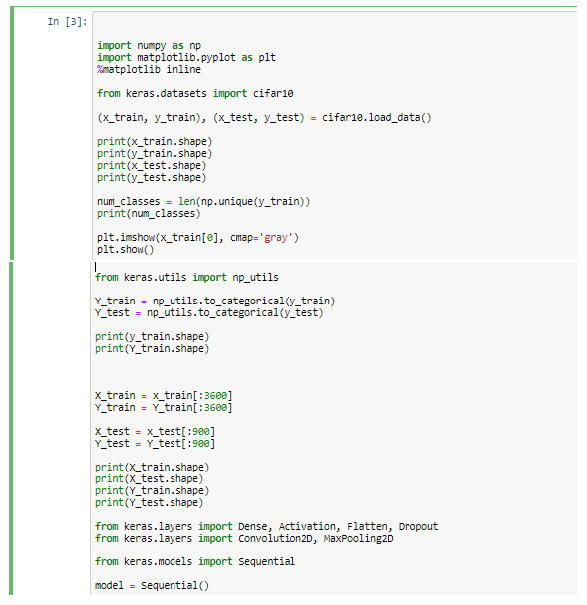

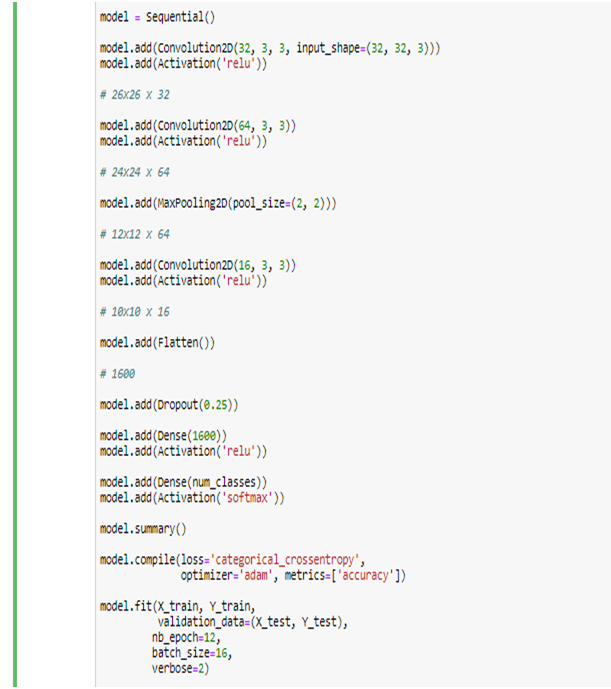

The deep learning architecture for image classification generally includes convolutional layers, making it a convolutional neural network (CNN). A typical use case for CNNs is where you feed the network images and the network classifies the data. CNNs tend to start with an input “scanner” which isn’t intended to parse all the training data at once. For example, to input an image of 100 x 100 pixels, you wouldn’t want a layer with 10,000 nodes.

Rather, you create a scanning input layer of, say, 10 x 10, which you feed the first 10 x 10 pixels of the image. Once that input has been passed, you feed it the next 10 x 10 pixels by moving the scanner one pixel to the right. This technique is known as a sliding window.
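The sliding-window idea can be sketched in a few lines of plain Python. This is a minimal illustration, assuming a 2D image stored as a list of rows; the function name `sliding_windows` and the `stride` parameter are hypothetical and not taken from any library.

```python
def sliding_windows(image, size, stride=1):
    """Yield (top, left, patch) for every size x size window of a 2D image."""
    height = len(image)
    width = len(image[0])
    for top in range(0, height - size + 1, stride):
        for left in range(0, width - size + 1, stride):
            # Cut out the size x size block whose top-left corner is (top, left).
            patch = [row[left:left + size] for row in image[top:top + size]]
            yield top, left, patch


# A made-up 100 x 100 "image" whose pixel value encodes its position.
image = [[r * 100 + c for c in range(100)] for r in range(100)]

windows = list(sliding_windows(image, size=10))
print(len(windows))      # 91 positions per axis, so 91 * 91 = 8281 windows
print(windows[1][:2])    # the second window is shifted one pixel right: (0, 1)
```

With a 10 x 10 scanner, the network only ever sees 100 values at a time, rather than all 10,000 pixels of the image at once.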

The following layers are used to build convolutional neural networks:

- INPUT [32x32x3] will hold the raw pixel values of the image, in this case an image of width 32, height 32, and with three color channels R,G,B.

- CONV layer will compute the output of neurons that are connected to local regions in the input, each computing a dot product between its weights and the small region it is connected to in the input volume. This may result in a volume such as [32x32x12] if we decide to use 12 filters.

- RELU layer will apply an elementwise activation function, such as max(0,x), which thresholds at zero. This leaves the size of the volume unchanged ([32x32x12]).

- POOL layer will perform a downsampling operation along the spatial dimensions (width, height), resulting in a volume such as [16x16x12].

- FC (i.e. fully-connected) layer will compute the class scores, resulting in a volume of size [1x1x10], where each of the 10 numbers corresponds to a class score, such as for the 10 categories of CIFAR-10. As with ordinary neural networks, and as the name implies, each neuron in this layer will be connected to all the numbers in the previous volume.
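The volume sizes in the list above can be checked with a small shape calculation. This is only a sketch under assumptions the list does not state: 3x3 convolution filters with stride 1 and zero-padding 1 (which keeps width and height at 32), and 2x2 pooling with stride 2. The helper names `output_size` and `trace_shapes` are hypothetical.

```python
def output_size(size, filter_size, stride, padding):
    """Spatial output size of a conv or pool layer along one dimension."""
    return (size - filter_size + 2 * padding) // stride + 1


def trace_shapes(width=32, height=32, channels=3, num_filters=12, num_classes=10):
    """Return the (width, height, depth) volume after each layer."""
    shapes = [("INPUT", (width, height, channels))]

    # CONV: assumed 3x3 filters, stride 1, zero-padding 1.
    w = output_size(width, filter_size=3, stride=1, padding=1)
    h = output_size(height, filter_size=3, stride=1, padding=1)
    shapes.append(("CONV", (w, h, num_filters)))

    # RELU: elementwise max(0, x), so the volume size is unchanged.
    shapes.append(("RELU", (w, h, num_filters)))

    # POOL: assumed 2x2 window with stride 2 halves width and height.
    w = output_size(w, filter_size=2, stride=2, padding=0)
    h = output_size(h, filter_size=2, stride=2, padding=0)
    shapes.append(("POOL", (w, h, num_filters)))

    # FC: one score per class.
    shapes.append(("FC", (1, 1, num_classes)))
    return shapes


for name, shape in trace_shapes():
    print(name, shape)
```

Under these assumptions the printed shapes match the list: 32x32x3, 32x32x12, 32x32x12, 16x16x12, and 1x1x10. Different filter sizes, strides, or padding would give different intermediate volumes.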

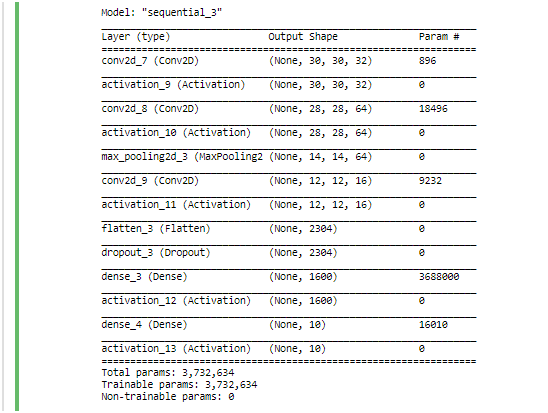

Output of the Model History

In this way, ConvNets transform the original image layer by layer from the original pixel values to the final class scores. Note that some layers contain parameters and others don't. In particular, the CONV/FC layers perform transformations that are a function not only of the activations in the input volume, but also of the parameters (the weights and biases of the neurons). On the other hand, the RELU/POOL layers implement a fixed function. The parameters in the CONV/FC layers are trained with gradient descent so that the class scores that the ConvNet computes are consistent with the labels in the training set for each image.
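To make the distinction between layers with and without parameters concrete, the following sketch counts the trainable parameters in the small example network. It assumes 3x3 convolution filters (the filter size is not given in the text) and a 16x16x12 volume feeding the fully-connected layer; `conv_params` and `fc_params` are hypothetical helper names.

```python
def conv_params(filter_size, in_channels, num_filters):
    """Weights plus one bias per filter in a convolutional layer."""
    return filter_size * filter_size * in_channels * num_filters + num_filters


def fc_params(num_inputs, num_outputs):
    """Weights plus one bias per output neuron in a fully-connected layer."""
    return num_inputs * num_outputs + num_outputs


layer_params = {
    "CONV": conv_params(filter_size=3, in_channels=3, num_filters=12),
    "RELU": 0,  # fixed function max(0, x): nothing to train
    "POOL": 0,  # fixed downsampling: nothing to train
    "FC": fc_params(num_inputs=16 * 16 * 12, num_outputs=10),
}

for name, count in layer_params.items():
    print(name, count)
print("total", sum(layer_params.values()))
```

Under these assumptions the CONV layer has 336 parameters and the FC layer has 30,730, for a total of 31,066. Only these values are adjusted by gradient descent; the RELU and POOL layers contribute nothing to train.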

Conclusion

This post has focused only on image classification and the deep learning architecture used for it. But there is more to computer vision than the classification task. The detection, segmentation, and localization of classified objects are equally important. We will look at these in the next post.
