Data is the new oil. A large number of companies now use artificial intelligence and big data tools to mine vast volumes of data. Data science is a promising goldmine of job opportunities, and it is high time to consider it as a career avenue.

The prospects for data science are skyrocketing. Today, it is estimated that more than 50,000 data science and machine learning jobs are lying vacant. In addition, nearly 40,000 new jobs are expected to be generated in India alone by 2020. Globally, the role of data scientist has expanded by over 650% since 2012, yet only 35,000 people in the US are skilled enough to fill it.

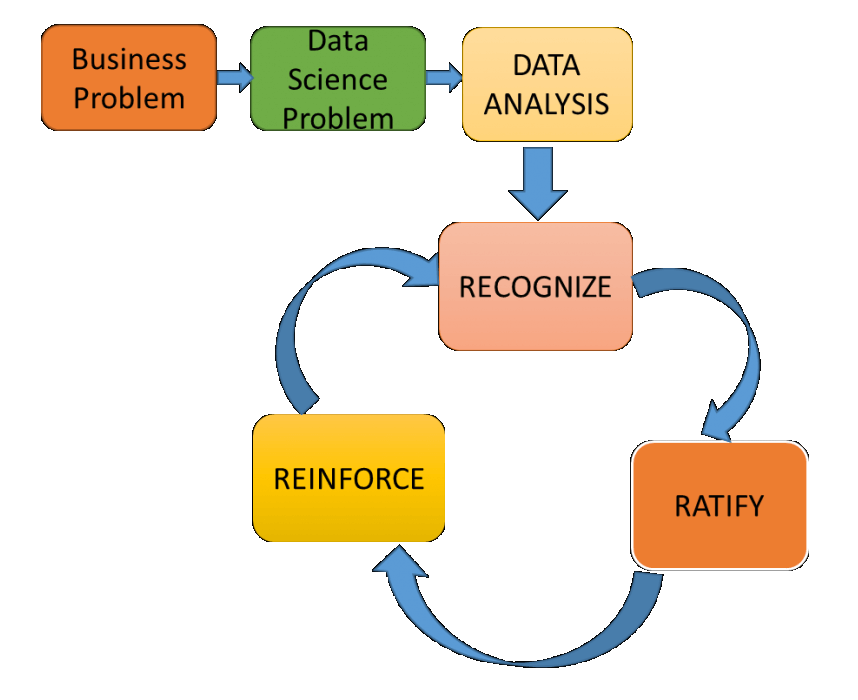

Data scientists are like a platform that connects the dots between programming and the application of data to solve intricate business challenges, says Pankaj Muthe, Academic Program Manager (APAC) and Company Spokesperson, QlikTech. The company delivers intuitive platform solutions for embedded analytics, self-service data visualization, and guided analytics and reporting across the globe.

According to a pool of experts, data science is the hottest job trend of this century and the second most popular master's-level degree after the MBA. No wonder this new breed of science and technology is believed to be driving a new wave of innovation! Data scientists and front-end developers attracted the highest remuneration across Indian startups throughout 2017.

Eligibility Criteria

To become a professional data scientist, a degree in computer science, engineering or mathematics is a must. Most data scientists have a knack for intricate tasks and the aptitude to learn challenging programming languages. Any good organization seeks interested, intelligent candidates with the zeal to learn more. Candidates need to be proficient in mathematics, statistics and programming. Moreover, data science jobs require a sound grounding in machine learning algorithms, statistical modeling and neural networks, as well as strong communication skills.

Today, many institutes offer state-of-the-art online data science courses that can prove extremely beneficial for career growth. Combining the theoretical knowledge and technical aspects of data science training, these institutes provide the skills and assistance needed to develop real-world applications. DexLab Analytics is one such institute, located in the heart of Delhi NCR. For more, feel free to reach us at <www.dexlabanalytics.com>.

Future Prospects

After land, labour and capital, data ranks as the fourth factor of production. According to the US Department of Statistics, the demand for data engineers is likely to grow by 40% by 2020. If you are looking for a flourishing career option, this is the place to be: an entry-level engineer typically begins their career as a business analyst and then progresses to project manager. Later, after years of experience, those who started out as novice business analysts can be promoted to chief data officer.

Interested in a career as a Data Analyst?

To learn more about Data Analyst with Advanced Excel Course – Enrol Now.

To learn more about Data Analyst with R Course – Enrol Now.

To learn more about Big Data Course – Enrol Now.

To learn more about Machine Learning Using Python and Spark – Enrol Now.

To learn more about Data Analyst with SAS Course – Enrol Now.

To learn more about Data Analyst with Apache Spark Course – Enrol Now.

To learn more about Data Analyst with Market Risk Analytics and Modelling Course – Enrol Now.