Almost 44 million people across the globe suffer from Alzheimer’s disease. The cost of treatment amounts to approximately one percent of global GDP. Despite cutting-edge developments in medicine and steady advances in technology, early detection of neurodegenerative disorders such as Alzheimer’s disease remains a major challenge. However, a group of Indian researchers has attempted to apply big data analytics to look for early signs of Alzheimer’s in patients.

Researchers from the National Brain Research Centre (NBRC), Manesar, have come up with a big data analytics framework that uses non-invasive imaging and other test data to detect diagnostic biomarkers in the early stages of Alzheimer’s.

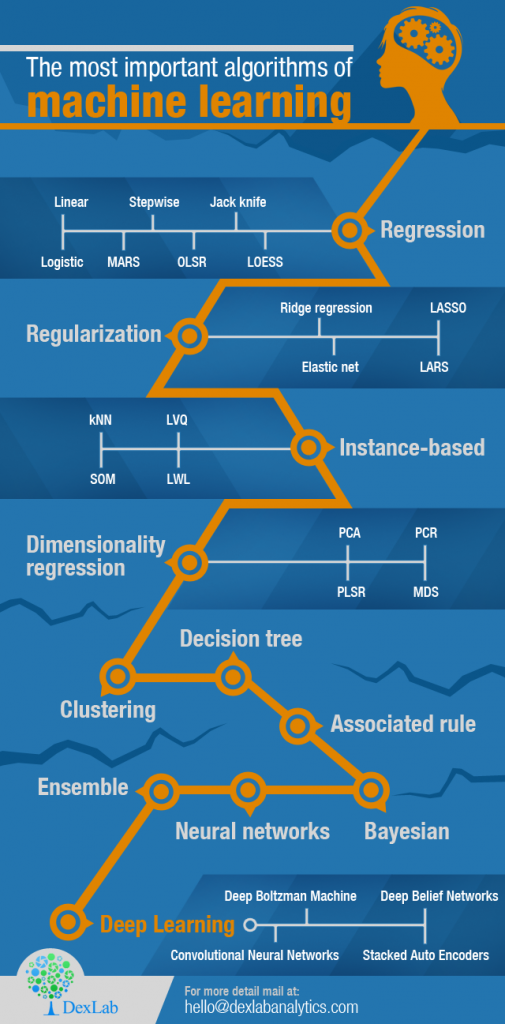

The Hadoop-powered framework integrates data from non-invasive tests – magnetic resonance spectroscopy (MRS), magnetic resonance imaging (MRI) and neuropsychological tests – and analyzes it using machine learning, data mining and statistical modeling algorithms.
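As a rough illustration of what integrating the three data sources could look like, the sketch below joins per-patient records from MRS, MRI and neuropsychological tests into a single feature row. It is a minimal in-memory sketch and not the NBRC framework itself: the field names (`glutathione`, `hippocampal_volume`, `memory_score`), the patient IDs and every value are hypothetical placeholders, and a Hadoop deployment would perform this kind of join across distributed storage rather than in Python dictionaries.

```python
# Hypothetical per-modality records keyed by patient ID.
# All field names and values are made-up placeholders, not real measurements.
mrs_data = {"P001": {"glutathione": 1.2}, "P002": {"glutathione": 0.7}}
mri_data = {"P001": {"hippocampal_volume": 3.9}, "P002": {"hippocampal_volume": 3.1}}
neuro_data = {"P001": {"memory_score": 28.0}, "P003": {"memory_score": 19.0}}


def integrate(*modalities):
    """Inner-join modality tables on patient ID.

    Only patients that appear in every modality are kept, so each
    merged row has a complete set of features.
    """
    common_ids = set(modalities[0])
    for table in modalities[1:]:
        common_ids &= set(table)

    merged = {}
    for patient_id in sorted(common_ids):
        row = {}
        for table in modalities:
            row.update(table[patient_id])
        merged[patient_id] = row
    return merged


integrated = integrate(mrs_data, mri_data, neuro_data)
print(integrated)
```

Here only `P001` has data in all three sources, so it is the only patient in the merged result. Dropping incomplete patients is one possible design choice; a real pipeline might instead keep them and handle the missing values explicitly.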

The framework is designed to address the three Vs of big data: variety, volume and velocity. Brain scans conducted using MRS or MRI yield vast amounts of data, which makes it impractical to study them manually or to compare data from multiple patients to see whether any pattern is emerging. As a result, machine learning is key: it speeds up the process, says Dr Pravat Kumar Mandal, a chief scientist of the research team.

To know more about the machine learning course in India, follow DexLab Analytics. This premier institute also offers state-of-the-art big data courses in Delhi – take a look at their course itinerary and decide for yourself.

The researchers use data about diverse aspects of the brain – neurochemical, structural and behavioural – gathered through MRS, MRI and neuropsychological tests. These attributes are identified and classified into groups to support clear diagnosis by doctors and pathologists. The framework is described as a multi-modality-based decision framework for early detection of Alzheimer’s, the researchers have noted in their paper published in the journal Frontiers in Neurology. The project is named BHARAT and works with the brain scans of Indians.

The new framework integrates the processing and storage of unstructured and structured data, and can analyze large volumes of complex data. For that, it relies on parallel computing, data organization, scalable data processing and distributed storage techniques, besides machine learning. Its multi-modal nature helps in distinguishing between healthy older people, patients with mild cognitive impairment and those suffering from Alzheimer’s.
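To make the idea of multi-modal classification more concrete, here is a minimal sketch that assigns a patient to one of the three groups from a combined feature vector. The article does not say which algorithm the NBRC framework uses, so a simple nearest-centroid classifier is swapped in purely for illustration. The three features (one neurochemical, one structural, one behavioural, each scaled to the range 0 to 1) and all training values are hypothetical.

```python
import math
from collections import defaultdict

# Hypothetical training rows: (neurochemical, structural, behavioural) features,
# each scaled to 0..1. The values are illustrative placeholders, not study data.
training = [
    ((0.90, 0.90, 0.90), "healthy_old"),
    ((0.80, 0.85, 0.95), "healthy_old"),
    ((0.60, 0.60, 0.60), "mild_cognitive_impairment"),
    ((0.55, 0.65, 0.50), "mild_cognitive_impairment"),
    ((0.30, 0.30, 0.20), "alzheimers"),
    ((0.25, 0.35, 0.30), "alzheimers"),
]


def fit_centroids(rows):
    """Average the feature vectors of each class to get one centroid per label."""
    grouped = defaultdict(list)
    for features, label in rows:
        grouped[label].append(features)
    return {
        label: tuple(sum(column) / len(column) for column in zip(*vectors))
        for label, vectors in grouped.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


centroids = fit_centroids(training)
print(predict(centroids, (0.58, 0.60, 0.55)))
```

Because the features from all three modalities sit in one vector, a low value in any one of them can pull a patient towards a different group, which is the intuition behind combining modalities instead of relying on MRI alone.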

“Other such big data tools for early diagnostics are based only on MRI images of patients. Our model incorporates neurochemical analysis, such as antioxidant glutathione depletion, from brain hippocampal regions. This data is extremely sensitive and specific. This makes our framework close to the disease process and presents a realistic approach,” says Dr Mandal.

The research team comprises Dr Mandal, Dr Deepika Shukla, Ankita Sharma and Tripti Goel, and the research is supported by the Department of Science and Technology. The number of patients diagnosed with Alzheimer’s is forecast to cross the 115 million mark by 2050. This degenerative neurological disease will soon pose a huge burden on the economies of various countries; hence it is of paramount importance to address the issue now, and in the best way possible.

The blog has been sourced from — www.thehindubusinessline.com/news/science/big-data-may-help-get-new-clues-to-alzheimers/article26111803.ece
