Most people believe they are above average. Businesses feel the same way about their best asset: data. They want to believe that their data is above average and perfect for advanced big data tools. But that is not always the case.

Do you really need big data tools?

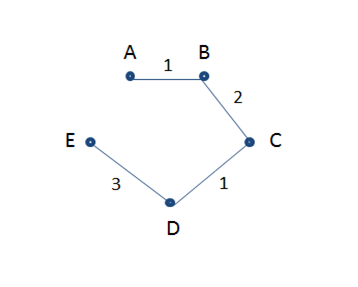

In the data world, big data tools like Hadoop, Spark and NoSQL are like freight trains delivering goods. Freight trains are powerful, but they have limited routes and a slow start. They are great for delivering goods in bulk on a regular schedule. However, if you need a swift delivery, a freight train might not be the best choice.

So first of all, it is important to understand whether your business actually has a big data scenario.

A 100-fold increase in data velocity, volume or variety indicates that you have a big data situation at hand. For example, if data velocity increases from thousands of transactions per hour to hundreds of thousands, or if the number of data sources shoots up from dozens to hundreds, you can safely conclude that your business is dealing with big data.
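The rule of thumb above can be turned into a quick check. The following is a minimal sketch, not a definitive test: the `DataProfile` fields, the `big_data_signals` function and the choice of units are assumptions made for illustration, and the growth factor is left adjustable because the right threshold depends on your business.

```python
from dataclasses import dataclass


@dataclass
class DataProfile:
    """Hypothetical snapshot of a data workload at one point in time."""
    transactions_per_hour: float  # velocity
    stored_gigabytes: float       # volume
    source_count: int             # variety


GROWTH_FACTOR = 100  # the "100-fold" rule of thumb from the text


def big_data_signals(before, after, factor=GROWTH_FACTOR):
    """Return the dimensions that grew by at least `factor` between snapshots."""
    signals = []
    if after.transactions_per_hour >= factor * before.transactions_per_hour:
        signals.append("velocity")
    if after.stored_gigabytes >= factor * before.stored_gigabytes:
        signals.append("volume")
    if after.source_count >= factor * before.source_count:
        signals.append("variety")
    return signals


# Illustrative numbers only: thousands of transactions per hour
# growing to hundreds of thousands.
last_year = DataProfile(transactions_per_hour=2_000, stored_gigabytes=50, source_count=24)
this_year = DataProfile(transactions_per_hour=300_000, stored_gigabytes=400, source_count=300)
signals = big_data_signals(last_year, this_year)
```

An empty result suggests that tuning your existing setup may be enough, while one or more flagged dimensions is a hint that it is worth evaluating big data tools.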

In such scenarios, you are likely to get frustrated with traditional SQL tools. Handling such massive data sets effectively calls for either a complete revamp or at least moderate tuning of your existing tools.

Which tools should you use?

The tool to use depends on the task at hand. For primary business outcomes such as sales and payments, traditional reporting tools within the data warehouse architecture are suitable. For secondary business outcomes such as following the customer journey in detail, tracking browsing history and monitoring device activity, big data tools within the data warehouse are necessary. In the data warehouse, these events are aggregated into models that summarize the business processes.

Incorporating Big Data Tools in Data Warehouse

Consider an alarm company with sensors that are connected through the internet across an entire country. Storing the response of every individual sensor in a SQL data warehouse would incur huge expense while adding little value. An alternative is to retain this raw information in a cheaper data lake environment and aggregate it later in the data warehouse. For example, the company could define the sequence of sensor events that constitutes a person locking up a house. A fact table recording departures and arrivals could then be stored in the data warehouse as aggregate events.
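To make the alarm company example concrete, here is a rough sketch of how raw sensor events might be reduced to departure and arrival facts. Everything in it is an assumption for illustration: the `SensorEvent` and `OccupancyFact` shapes, the sensor and state names, and the rule that a door closing followed by the alarm being armed within two minutes counts as a departure. A real company would define its own rules.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class SensorEvent:
    """One raw reading as it might sit in the data lake (hypothetical shape)."""
    house_id: str
    sensor: str   # e.g. "door", "alarm_panel", "motion"
    state: str    # e.g. "closed", "armed", "disarmed"
    at: datetime


@dataclass(frozen=True)
class OccupancyFact:
    """One row of the aggregate fact table kept in the warehouse."""
    house_id: str
    kind: str     # "departure" or "arrival"
    at: datetime


# Assumed rule: door closed, then alarm armed within this window = lock-up.
LOCKUP_WINDOW = timedelta(minutes=2)


def build_occupancy_facts(events):
    """Collapse raw sensor events into departure and arrival facts."""
    facts = []
    last_door_closed = {}  # house_id -> time the door was last closed
    for event in sorted(events, key=lambda e: e.at):
        if event.sensor == "door" and event.state == "closed":
            last_door_closed[event.house_id] = event.at
        elif event.sensor == "alarm_panel" and event.state == "armed":
            closed_at = last_door_closed.get(event.house_id)
            if closed_at is not None and event.at - closed_at <= LOCKUP_WINDOW:
                facts.append(OccupancyFact(event.house_id, "departure", event.at))
        elif event.sensor == "alarm_panel" and event.state == "disarmed":
            facts.append(OccupancyFact(event.house_id, "arrival", event.at))
        # Every other reading (motion, temperature, ...) stays in the lake only.
    return facts
```

The point of the design is that the warehouse only ever receives the small `OccupancyFact` rows, while the bulky raw readings remain in cheaper storage.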

There are many other use cases. Some are given below:

Sum up and filter IoT data: A leading bed manufacturing company uses biometric sensors in its range of luxury mattresses. Apache Hadoop could be used to store the individual sensor readings, and Apache Spark can be employed to aggregate and filter the signals. The aggregated data in the data warehouse can then be used to create time-trended reports once the boundary metrics are surpassed.
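The aggregate-then-filter step in the mattress example can be sketched in a few lines. This is plain Python standing in for what would be a Spark job at real scale, and the names (`hourly_breaches`, `upper_limit`, the tuple layout of a reading) are hypothetical, chosen only to show the idea of keeping summarized rows that cross a boundary metric.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean


def hourly_breaches(readings, upper_limit):
    """Average readings per device and hour; keep hours above `upper_limit`.

    `readings` is an iterable of (device_id, timestamp, value) tuples.
    """
    buckets = defaultdict(list)
    for device_id, timestamp, value in readings:
        # Truncate the timestamp to the start of its hour.
        hour = timestamp.replace(minute=0, second=0, microsecond=0)
        buckets[(device_id, hour)].append(value)

    rows = []
    for (device_id, hour), values in sorted(buckets.items()):
        average = mean(values)
        if average > upper_limit:  # the "boundary metric" from the text
            rows.append({
                "device_id": device_id,
                "hour": hour,
                "avg_value": average,
                "samples": len(values),
            })
    return rows
```

Only the rows returned here would be loaded into the warehouse for time-trended reporting; the individual readings would stay in Hadoop storage.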

Merge real-time data with past data: Financial institutions need live access to market data. However, they also need to store that data and use it later to identify historical trends. Tools like Apache Kafka or Amazon Kinesis are important for merging these two types of data, because they can stream data directly to visualization tools with hardly any delay.

The ultimate goal is to strike a balance between the two sides of the data pipeline. While it is important to collect as much raw data about customers as possible, it is equally important to use the right tool for the right job.

To read more blogs on the latest developments in the field of big data, follow DexLab Analytics. We are a premier Hadoop training institute in Gurgaon. To aid your big data dreams, we have started a new admission drive, #BigDataIngestion, where we offer a flat 10% discount to all students interested in our big data Hadoop courses. Enroll now!
