Keen to increase your salary? Perhaps you’ve accomplished a difficult task and are in a position to ask for a raise. Or maybe it’s time to make a switch.

Whatever the reason, the crux in both cases is a salary hike, but how do you negotiate one well? Salary negotiations are among the toughest battles fought inside boardrooms. Interestingly, only 39 percent of professionals even tried to negotiate a higher salary during their last job offer, according to a 2018 survey of close to 3,000 people conducted by global staffing firm Robert Half.

Below, we’ve handpicked a few of the best ways to raise your salary without raising an eyebrow. Read on for the key pieces of advice:

Never Lose Your Calm

Demonstrate emotional intelligence, not impatience. You are yet to get that job, and the way you negotiate your salary reflects how you are going to do business and whether you can remain calm in stressful situations.

Do Your Homework

“Be confident in your own skin! Your salary negotiations can deeply suffer owing to a lack of preparation,” says Jim Johnson, senior vice president at Robert Half Technology. The firm publishes an annual salary guide, backed by data, covering more than 75 positions in the IT field.

In addition, Mr. Johnson recommends weighing the competitiveness of your current pay. That means judging it not only against your role or designation, but also against your skills, vertical industry and area, including security and data analytics.

Certifications Help

Today, an array of certified and non-certified skills is in demand in the market. As a result, these skills attract a pay premium: on average, 7.6 percent of base salary for a single certification and 9.4 percent of base salary for certain single, non-certified skills.
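To see what those percentages mean in practice, here is a rough sketch in Python. The two rates come from the figures above; the base salary of 80,000 and the helper name `premium_amount` are purely hypothetical, chosen only for illustration, and the calculation assumes the premium is a simple percentage of base salary.

```python
# Average premium rates quoted in the article, as fractions of base salary.
CERT_PREMIUM = 0.076    # a single certification
SKILL_PREMIUM = 0.094   # certain single, non-certified skills


def premium_amount(base_salary: float, rate: float) -> float:
    """Return the premium for a given base salary, rounded to two decimals."""
    return round(base_salary * rate, 2)


# Hypothetical base salary, used only as an example.
base = 80_000

cert_bonus = premium_amount(base, CERT_PREMIUM)
skill_bonus = premium_amount(base, SKILL_PREMIUM)

print(f"Certification premium on {base}: {cert_bonus}")
print(f"Non-certified skill premium on {base}: {skill_bonus}")
```

Plug in your own base salary to get a ballpark figure to bring to the negotiating table. Keep in mind these are averages, so the premium for any particular skill may be higher or lower.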

Among these, Apache Spark Programming Training, Data Science, Cryptography and Penetration Testing are the hottest. Python Course in Delhi NCR, Artificial Intelligence and Risk Analytics follow close behind.

Beyond that, open source skills are quite popular, especially those that concern DevOps, cloud and containers.

Imbibe Soft Skills As Much As You Can

Developing soft skills is an art! In this tough age of digital transformation, IT professionals constantly have to work in cross-functional teams with colleagues from different areas of the business, as well as with clients and partners who have zero tech skills.

For this and more, you need a good command of English, undying patience and the ability to understand people and what they have to say. No wonder many IT bigwigs say these soft skills are not as soft as they sound: sometimes it’s really hard to explain things to, and teach, people from different parts of the industry.

“It’s funny that we even talk about these skills as ‘soft,’ because they are very hard to master and are frequently the cause of more trouble than lack of ‘hard’ skills,” shares Anders Wallgren, CTO at Electric Cloud.

Care to nurture your data analytics skills? The experts at DexLab Analytics are here!

This blog first appeared on enterprisersproject.com/article/2018/11/what-best-way-increase-your-salary-it-professional

Interested in a career in Data Analyst?

To learn more about Data Analyst with Advanced excel course – Enrol Now.

To learn more about Data Analyst with R Course – Enrol Now.

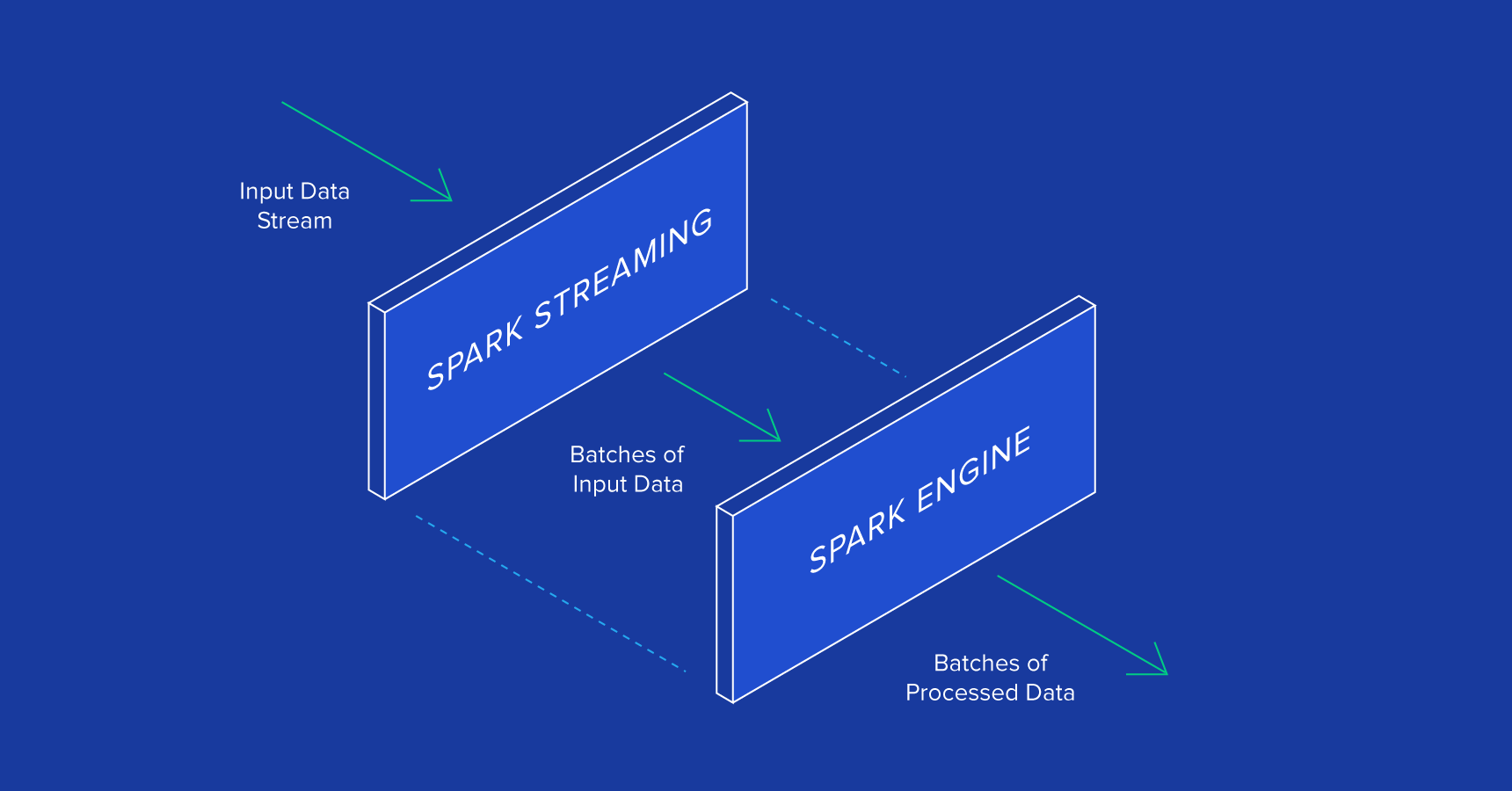

To learn more about Big Data Course – Enrol Now.To learn more about Machine Learning Using Python and Spark – Enrol Now.

To learn more about Data Analyst with SAS Course – Enrol Now.

To learn more about Data Analyst with Apache Spark Course – Enrol Now.

To learn more about Data Analyst with Market Risk Analytics and Modelling Course – Enrol Now.