Pipes in R

Pipe, written as “%>%“ is basically an efficient operator, supplied by magrittr R package. The pipe operator is notably famous due to its wide range of use in dplyr and by the proficient dplyr users. The usage of pipe operator allows one to write “sin(5)” as “5 %>% sin“, which is inspired by F#‘s pipe-forward operator “|>” and is further characterised by:

let (|>) x f = f x

Direct substitution to the above problem is not actually performed by the magrittr pipe. The reason being, “5 %>% sin” is assessed in a different environment than “sin(5)” and would be (unlike F#‘s “|>“).

The environment change is explained below:

library(“dplyr”)

f <- function(…) {print(parent.frame())}

f(5)

## <environment: R_GlobalEnv>

5 %>% f

## <environment: 0x1032856a8>

An example of Debugging

Derived from the “Chaining” section of “Introduction to dplyr“, study the following example

library(“dplyr”)

library(“nycflights13”)

flights %>%

group_by(year, month, day) %>%

select(arr_delay, dep_delay) %>%

summarise(

arr = mean(arr_delay, na.rm = TRUE),

dep = mean(dep_delay, na.rm = TRUE)

) %>%

filter(arr > 30 | dep > 30)

## Adding missing grouping variables: `year`, `month`, `day`

## Source: local data frame [49 x 5]

## Groups: year, month [11]

##

## year month day arr dep

## <int> <int> <int> <dbl> <dbl>

## 1 2013 1 16 34.24736 24.61287

## 2 2013 1 31 32.60285 28.65836

## …

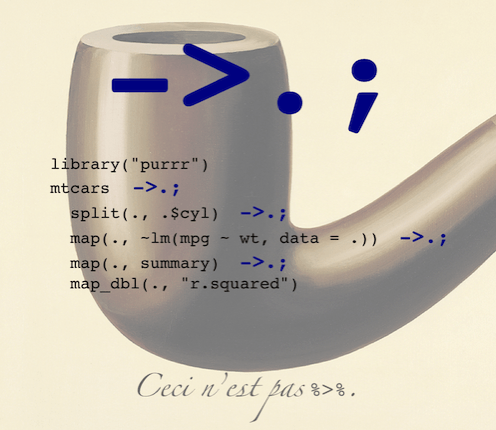

Use of Bizarro Pipe

Sometimes, the technical translation from a magrittr pipeline to a Bizarro pipeline is essential. It is easier; make all the first arguments replace with “dot” and change the operator “%>%” with the Bizarro pipe glyph: “->.;“.

Re-running of the modified code is suggestible by pasting into R’s command console and this is what follows:

flights ->.;

group_by(., year, month, day) ->.;

select(., arr_delay, dep_delay) ->.;

## Adding missing grouping variables: `year`, `month`, `day`

summarise(.,

arr = mean(arr_delay, na.rm = TRUE),

dep = mean(dep_delay, na.rm = TRUE)

) ->.;

filter(., arr > 30 | dep > 30)

## Source: local data frame [49 x 5]

## Groups: year, month [11]

##

## year month day arr dep

## <int> <int> <int> <dbl> <dbl>

## 1 2013 1 16 34.24736 24.61287

## 2 2013 1 31 32.60285 28.65836

## …

The warning issued by dplyr::select() is evident (though we just pasted the entire array of commands, all at once). This means despite help(select) saying “select() keeps only the variables you mention” this example is depending on the (useful) accommodation that dplyr::select() preserves grouping columns in addition to user specified columns (though this accommodation is not made for columns specified in dplyr::arrange()).

Read Also: Training the Future to be BigData Analytics Fluent: What led two Gurgaon-based entrepreneurs to entice youngsters into learning analytics?

A Caveat

Mind it, making an assignment at the end of the pipe is crucial and not at the beginning to ascertain a value from a Bizarro pipe. No one should write the following:

VARIABLE <-

flights ->.;

group_by(., year, month, day)

as it captures a value from the beginning and not at the end.

The correct write-up is:

flights ->.;

group_by(., year, month, day) -> VARIABLE

Read Also: The Rise of Influential Women Who Made It Big in Big Data

Turning Compositions More Eager

Bizarro pipe makes the things more eager, which still pose some challenges. What we can do is introduce an error and display how to localize errors in front of lazy eval data structures.

In the following example, ‘month’ is misspelled as ‘moth’, though intentionally. The error is not revealed, not until printing, which means it has been identified after we finished structuring the pipeline.

s <- dplyr::src_sqlite(“:memory:”, create = TRUE)

flts <- dplyr::copy_to(s, flights)

flts ->.;

group_by(., year, moth, day) ->.;

select(., arr_delay, dep_delay) ->.;

summarise(.,

arr = mean(arr_delay, na.rm = TRUE),

dep = mean(dep_delay, na.rm = TRUE)

) ->.;

filter(., arr > 30 | dep > 30)

## Source: query [?? x 5]

## Database: sqlite 3.11.1 [:memory:]

## Groups: year, moth

## na.rm not needed in SQL: NULL are always droppedFALSE

## na.rm not needed in SQL: NULL are always droppedFALSE

## Error in rsqlite_send_query(conn@ptr, statement) : no such column: moth

We can attempt to insert dplyr into eager evaluation using the eager value landing operator “replyr::`%->%`” (from replyr package) to develop the “extra eager” Bizarro glyph: “%->%.;“.

While re-writing the code in terms of the extra eager Bizarro glyph, this is the result.

While re-writing the code in terms of the extra eager Bizarro glyph, this is the result.

install.packages(“replyr”)

library(“replyr”)

flts %->%.;

group_by(., year, moth, day) %->%.;

## Error in rsqlite_send_query(conn@ptr, statement) : no such column: moth

select(., arr_delay, dep_delay) %->%.;

summarise(.,

arr = mean(arr_delay, na.rm = TRUE),

dep = mean(dep_delay, na.rm = TRUE)

) %->%.;

## na.rm not needed in SQL: NULL are always droppedFALSE

## na.rm not needed in SQL: NULL are always droppedFALSE

filter(., arr > 30 | dep > 30)

## Source: query [?? x 5]

## Database: sqlite 3.11.1 [:memory:]

However, localization of the error is successful.

Read Also: Mastering Data: A Comprehensive Take on MDM and Analytics

Attention! Attention!

During dot debugging, whenever a statement, like dplyr::select() errors-out, it indicates the Bizarro assignment on that particular line fails to occur (normal R exception semantics). The dot will be carrying the former line value and the pasted collection of code will continue even after the failing line. Hence, it will be common to see odd results and extra errors found in the pipeline.

Advice – The first error message is RELIABLE.

The Technique

The trick is to prepare your eyes to read “->.;” or “%->%.;” as a single indivisible or atomic glyph, and not as a succession of operators, separators and variables.

This post originally appeared on – www.win-vector.com/blog/2017/03/debugging-pipelines-in-r-with-bizarro-pipe-and-eager-assignment

Interested in a career in Data Analyst?

To learn more about Machine Learning Using Python and Spark – click here.

To learn more about Data Analyst with Advanced excel course – click here.

To learn more about Data Analyst with SAS Course – click here.

To learn more about Data Analyst with R Course – click here.

To learn more about Big Data Course – click here.

R Programming, R programming certification, R Programming Training, R Programming Tutorial