Today’s workplace has turned volatile as it tries to adjust to the new normal. Along with struggling to stay indoors, living a virtual life, and adopting new habits of social distancing, people also have to deal with job losses, pay cuts, or, worse, a lack of vacancies. Most sectors are getting hit, except for those driven by cutting-edge technology such as Data Science and Artificial Intelligence. The need to transition into a digital world is greater than ever. According to the World Economic Forum, there will be a greater push towards “digitization” as well as “automation”. This signals a growing need for professionals with a background in Data Science and Artificial Intelligence in a future that is going to be heavily data-reliant.

So, what are you going to do? Sit back and wait until the storm passes, or use this downtime to upskill yourself with a Data Science course? With the PM stressing that “skill, re-skill and upskill” is the need of the hour, you can hardly afford to lose more time. Since Data Science is one of the comparatively steadier fields and is growing despite the odds, it is time to acquire data literacy and stay relevant in a workplace that is becoming increasingly data-driven. From healthcare to manufacturing, different sectors are busy decoding the data in hand to go digital in a pandemic-ridden world, and employers are looking for people who are willing to push the envelope to remain relevant.

What is data literacy?

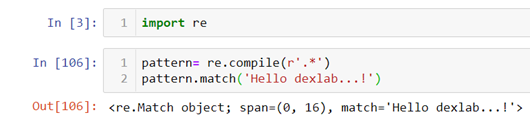

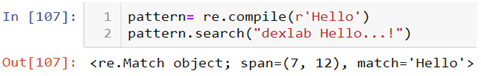

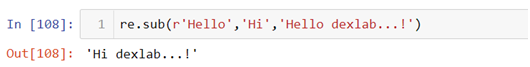

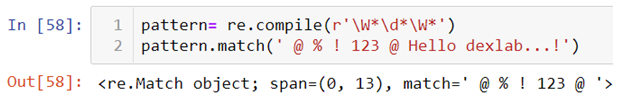

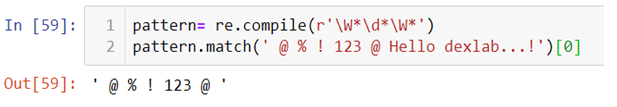

Before going further, you must understand what data literacy means. Data literacy refers to having the in-depth knowledge of data that lets employees work with it, derive actionable information from it, and channel that information into informed decisions. Data literacy is not limited to the data team of data scientists, however. It takes in all employees, so that data flows seamlessly throughout the organization. Without employees who know their way around data, an organization can never realize its dream of building a data-driven culture. Having a background in Data Science using Python training is the key to achieving data literacy.

The demand for data scientists and data analysts is soaring

Despite the ominous presence of the pandemic, Data Science professionals remain in demand, and in August the demand for Data Analysts and Data Scientists soared. As per a recent study, a Data Science professional in India can expect no less than ₹9.5 lakh per annum. With prestigious companies like Infosys, IBM India, Cognizant Technology Solutions, and Accenture hiring, Data Science training has become essential for anyone who wants to grab these job opportunities.

Getting Data Science certification can help you close the gap

The skill gap is there, but that does not mean it cannot be closed. On the contrary, it is entirely possible, and imperative, that you take the necessary step of upskilling yourself to be ready for the Data Science field. A working knowledge of data is not enough: you must be familiar with the latest Data Science tools, know how to work with different models, and be comfortable with data extraction and data manipulation. You will need to master all of these skills, and more, before you go looking for a well-paying job.

Self-study might seem like a tempting idea, but it is not a practical solution. If you want to be industry-ready, you must know what the industry expects from a Data Science professional, and only a faculty of industry experts can give you that knowledge while guiding you through a well-designed Python for data science training course.

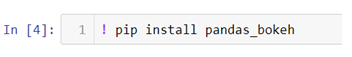

An institute such as DexLab Analytics understands the need of the hour and has a strong team of industry professionals and experts to help aspiring Data Scientists and Data Analysts fulfill their dreams. Along with offering state-of-the-art Data Science certification courses, it also provides courses like Machine Learning Using Python.

No matter which way you look, upskilling is the need of the hour as the world embraces the power of Data Science. Stop procrastinating and get ready for the future.
