The healthcare industry is one of the most important industries for human welfare. An analysis by U.S. federal government actuaries found that Americans spent $3.65 trillion on health care in 2018 (as reported by Axios), and the Indian healthcare market is expected to reach $372 billion by 2022. To reduce costs and move towards a personalized healthcare system, the industry faces three major hurdles:

1) Electronic record management

2) Data integration

3) Computer-aided diagnoses.

Machine learning is a vast field with a wide array of tools, techniques, and frameworks that can be applied to these challenges. Today, machine learning using Python is proving very helpful in streamlining administrative processes in hospitals, mapping and treating life-threatening diseases, and personalizing medical treatments.

This blog post focuses primarily on the applications of machine learning in healthcare.

Real-life Applications of Machine Learning in the Health Sector

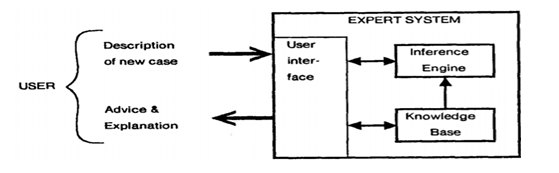

- The MYCIN system was developed at Stanford University to identify the specific strains of bacteria that cause infections. It proposed an acceptable therapy in 69% of cases, which at the time was better than the performance of infectious disease experts.

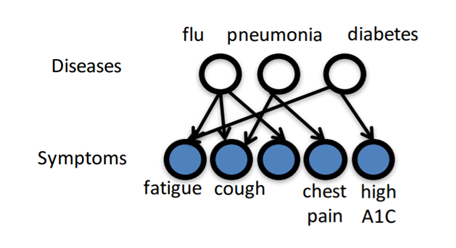

- In the 1980s at the University of Pittsburgh, a diagnostic tool named INTERNIST-I was developed to diagnose various diseases, such as flu, pneumonia and diabetes, from their symptoms. One of the key functions of INTERNIST-I was to identify problem areas, with a view to ruling out unlikely diagnoses.

- Researchers from Pennsylvania have recently trained an AI that can predict which patients are most likely to die within a year, based on their heart test results. The AI can predict a patient's death even when the figures look quite normal to doctors. The researchers trained it on 1.77 million electrocardiogram (ECG) results and built two versions of the AI: one using only the ECG data, and another using the ECG data along with the age and gender of the patients.

- P1vital’s PReDicT (Predicting Response to Depression Treatment), built on machine learning algorithms, aims to develop a commercially feasible way to diagnose and treat depression in clinical practice.

- KenSci has developed machine learning algorithms that predict illnesses and their treatments, enabling doctors to detect specific patterns and indicators of population health risks. This falls under the purview of disease progression modeling.

- Project Hanover, developed by Microsoft, uses machine learning-based technologies for multiple purposes, including developing AI-based technology for cancer treatment and personalizing drug combinations for Acute Myeloid Leukemia (AML).

- Preserving data in the healthcare industry has always been a daunting task. Advances in analytics technology have made it more manageable over the years, but even now most of the processes involved take a lot of time to complete.

- PathAI’s technology uses machine learning to help pathologists make faster and more accurate diagnoses. It also helps identify patients who might benefit from new and different types of treatment or therapy in the future.

Machine learning can prove disruptive in the medical sector by automating processes related to data collection and collation, which can be highly cost-effective. Techniques such as support vector machines (SVMs) and optical character recognition (OCR) can be used to automate document reading and classification with high levels of precision and accuracy.
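As a rough illustration of the document-classification idea above, here is a minimal sketch of a linear support vector machine trained by subgradient descent on the hinge loss, using only the Python standard library. The training snippets, the label names, and the helper names (`tokenize`, `train_linear_svm`, `classify`) are all hypothetical and made up for this example. A real system would work on OCR output from actual records and would more likely rely on an established library.

```python
from collections import Counter

# Hypothetical, hand-made snippets standing in for OCR output.
TRAINING_DOCS = [
    ("hemoglobin glucose blood panel result", +1),   # +1 = lab report
    ("blood culture specimen result negative", +1),
    ("invoice amount due payment total", -1),        # -1 = invoice
    ("payment received billing statement total", -1),
]


def tokenize(text):
    """Lowercase and split on whitespace (a deliberately simple tokenizer)."""
    return text.lower().split()


def train_linear_svm(examples, epochs=50, lr=0.1, reg=0.01):
    """Fit bag-of-words weights by subgradient descent on the
    L2-regularized hinge loss (no bias term, to keep the sketch short)."""
    weights = {}
    for _ in range(epochs):
        for text, label in examples:
            counts = Counter(tokenize(text))
            score = sum(weights.get(tok, 0.0) * n for tok, n in counts.items())
            # Regularization step: shrink every weight slightly.
            for tok in weights:
                weights[tok] *= 1.0 - lr * reg
            # Hinge-loss step: update only when the margin is below 1.
            if label * score < 1.0:
                for tok, n in counts.items():
                    weights[tok] = weights.get(tok, 0.0) + lr * label * n
    return weights


def classify(weights, text):
    """Return a label name based on the sign of the linear score."""
    counts = Counter(tokenize(text))
    score = sum(weights.get(tok, 0.0) * n for tok, n in counts.items())
    return "lab_report" if score > 0 else "invoice"


weights = train_linear_svm(TRAINING_DOCS)
print(classify(weights, "glucose blood panel"))
print(classify(weights, "invoice payment due"))
```

Words that appear only in lab reports end up with positive weights and words that appear only in invoices end up with negative ones, so the sign of the summed score decides the class. Four toy documents are of course far too few to say anything about precision or accuracy in practice.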

To Sum Up:

Modern technologies such as Machine Learning, Artificial Intelligence and Big Data Analytics are still finding their footing in multiple domains, and they have a long way to go before their success is assured. It is also important for all of us to become familiar with these new-age technologies.

With the expansion of quality machine learning courses in India, including courses on neural networks and machine learning with Python, reputed institutes are joining hands to bring about this revolution. The initial days will be slow and hard, but there is no doubt that these cutting-edge technologies will transform the medical industry along with a range of other industries, making early diagnoses possible and reducing overall cost. Besides, with the introduction of successful recommender systems and other promises of personalized healthcare, coupled with systematic management of medical records, Machine Learning will surely usher in the future for good!
