Tech giants Google and Facebook have joined hands to improve the AI development experience and take it to the next level.

Last week, the two companies revealed that a number of their engineers are working to connect PyTorch, Facebook's open-source machine learning framework, to Google's Tensor Processing Units (TPUs). The collaboration is a rare case of two technology rivals working together on a joint project.

“Today, we’re pleased to announce that engineers on Google’s TPU team are actively collaborating with core PyTorch developers to connect PyTorch to Cloud TPUs,” said Rajen Sheth, Google Cloud director of product management. “The long-term goal is to enable everyone to enjoy the simplicity and flexibility of PyTorch while benefiting from the performance, scalability, and cost-efficiency of Cloud TPUs.”

Joseph Spisak, product manager for AI at Facebook, added: “Engineers on Google’s Cloud TPU team are in active collaboration with our PyTorch team to enable support for PyTorch 1.0 models on this custom hardware.”

Google first introduced its TPU in 2016 at its annual developer conference, Google I/O, and that same year the search giant began pitching the technology to companies and researchers to support their advanced machine learning projects. Since then, Google has sold access to its TPUs through its cloud computing business rather than selling the chips directly to customers, as Nvidia does.

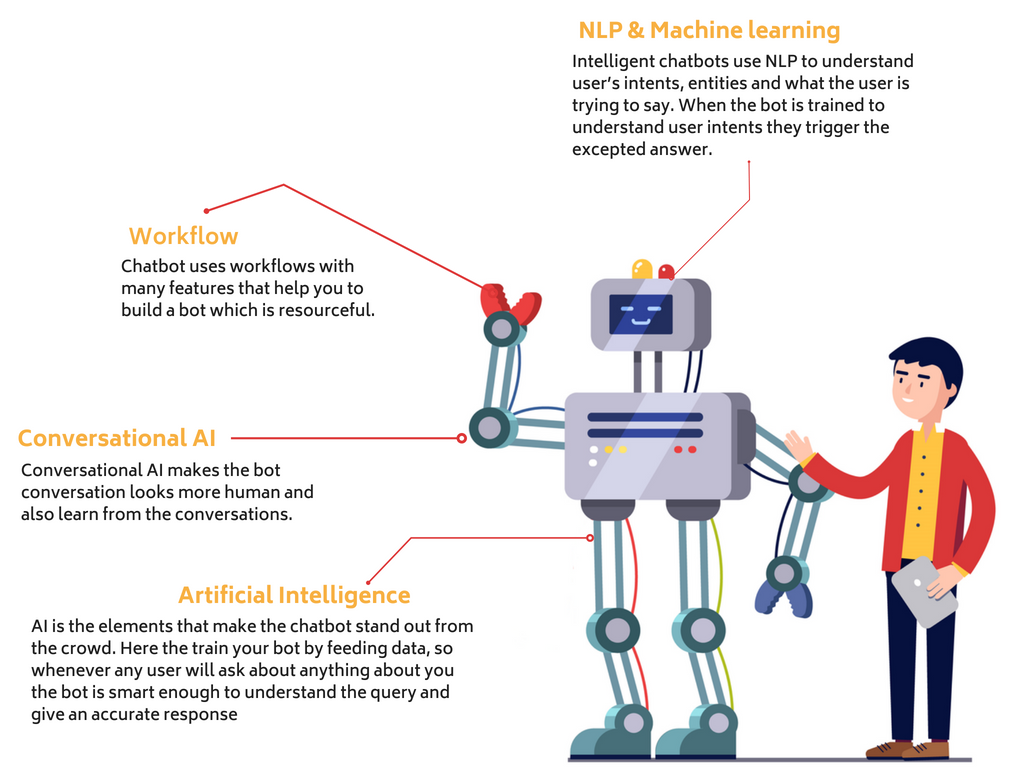

Over the years, AI techniques such as deep learning have steadily expanded in scope and capability, driven in part by tech giants like Facebook and Google, which use them to build software that automatically performs intricate tasks such as recognizing objects in photos.

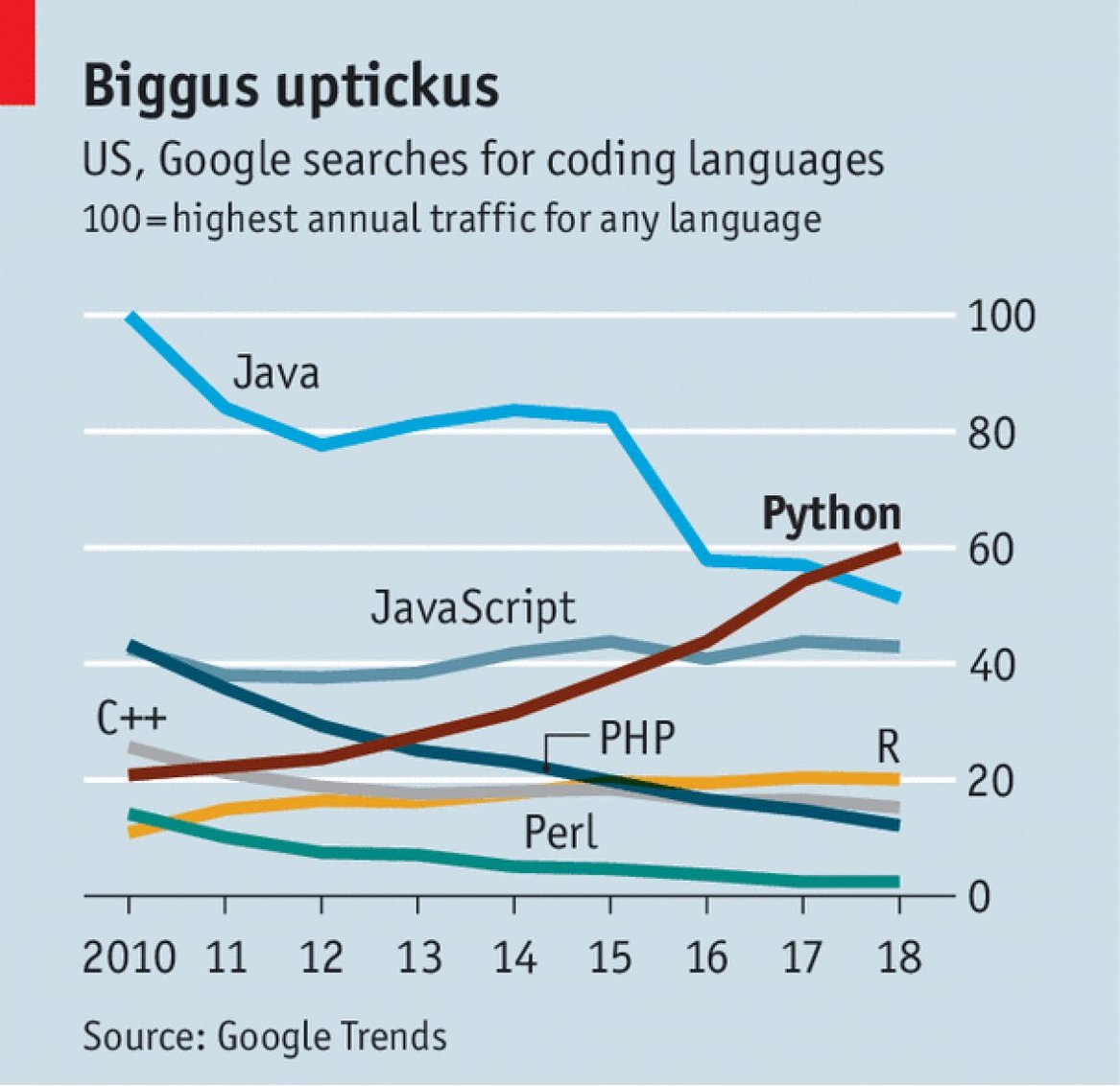

Having explored the budding machine learning field for years, many of these companies have built their own AI software frameworks: coding tools designed to make it easier to develop custom machine learning software. They also release these frameworks for free as open source, largely to popularize them among developers.

For the last couple of years, Google has focused on developing its TPUs to work best with TensorFlow, its own framework. Its decision to work with Facebook's PyTorch signals a willingness to support more than just its in-house AI framework. “Data scientists and machine learning engineers have a wide variety of open source tools to choose from today when it comes to developing intelligent systems,” said Blair Hanley Frank, principal analyst at Information Services Group. “This announcement is a critical step to help ensure more people have access to the best hardware and software capabilities to create AI models.”

Besides Facebook and Google, Amazon and Microsoft are also expanding their AI investments around PyTorch.

DexLab Analytics offers top-of-the-line machine learning training for data enthusiasts. Its machine learning certification course is among the best in the industry, so go check out the offer now!

The blog has been sourced from: www.dexlabanalytics.com/blog/streaming-huge-amount-of-data-with-the-best-ever-algorithm
