Data scientists are big data ninjas. They tackle colossal amounts of messy data and use their skills in statistics, mathematics and programming to collect, manage and analyze it. They then combine those analytic abilities with industry expertise, contextual knowledge and healthy skepticism to find solutions to meaningful business challenges.

But how do they become such competent data wranglers? Years of experience, a substantial pool of knowledge, or both? In this blog, we have penned down some of the most important data science interview questions. They will not only help you crack tough job interviews but also test your knowledge of this promising field of study.

DexLab Analytics offers incredible Data Science Courses in Delhi. Start learning from the experts!

What do you mean by data science?

Data science is a fine blend of statistics, technical expertise and business acumen. Together, they are used to analyze datasets and predict future trends.

Which is more appropriate for text analytics – R or Python?

Python includes a very versatile library, Pandas, which gives analysts high-performance data analysis tools and easy-to-use data structures. R has text-mining packages of its own, but it is geared more towards statistical modelling than general-purpose text processing. Therefore, Python is usually the more suitable choice for text analytics.
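
As a rough illustration of the kind of task text analytics starts with, here is a minimal word-frequency sketch. It deliberately uses only Python's standard library rather than Pandas, and the `reviews` data and the `word_frequencies` helper are made up for this example.

```python
import re
from collections import Counter

def word_frequencies(documents):
    """Count how often each lowercase word appears across all documents."""
    counts = Counter()
    for doc in documents:
        # Lowercase the text and keep runs of letters/apostrophes as tokens.
        counts.update(re.findall(r"[a-z']+", doc.lower()))
    return counts

# Hypothetical sample data, invented for illustration only.
reviews = [
    "Great course, great mentors.",
    "The course content is great.",
]

freq = word_frequencies(reviews)
print(freq.most_common(2))  # the two most frequent words with their counts
```

In a real project, the resulting counts would typically be loaded into a Pandas data structure for further filtering and aggregation.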

Explain a Recommender System.

Today, recommender systems are deployed extensively across multiple fields, be it music recommendations, movie preferences, search queries, social tags, or research and analysis. A recommender system works on a person's past behavior to build a model that predicts what that individual is likely to buy, watch or read in the future.
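
One common way to build such a model is user-based collaborative filtering: find users whose past ratings resemble yours, then suggest what they liked. The sketch below is only one possible approach, and the `ratings` data, user names and function names are all hypothetical.

```python
from math import sqrt

# Hypothetical past ratings: user -> {item: rating on a 1-5 scale}.
ratings = {
    "ana": {"film_a": 5, "film_b": 4, "film_c": 1},
    "ben": {"film_a": 4, "film_b": 5, "film_d": 4},
    "cara": {"film_c": 5, "film_d": 2, "film_e": 4},
}

def cosine_similarity(a, b):
    """Cosine similarity between two users' rating dictionaries."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[item] * b[item] for item in shared)
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)

def recommend(user, ratings, top_n=2):
    """Rank items the user has not rated by similarity-weighted ratings."""
    scores = {}
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine_similarity(ratings[user], other_ratings)
        for item, rating in other_ratings.items():
            if item not in ratings[user]:
                scores[item] = scores.get(item, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)[:top_n]

print(recommend("ana", ratings))
```

Here "ana" rates films much like "ben" does, so the film that "ben" liked and "ana" has not seen is ranked first.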

What are the advantages of R?

- A wide assortment of tools available for data analysis

- Performs robust calculations on matrices and arrays

- A well-developed yet simple programming language

- Supports an encompassing set of machine learning applications

- Acts as a bridge between numerous tools, software and datasets

- Helps in developing reproducible analyses

- Offers a powerful package ecosystem for versatile needs

- Ideal for solving complex data-oriented challenges

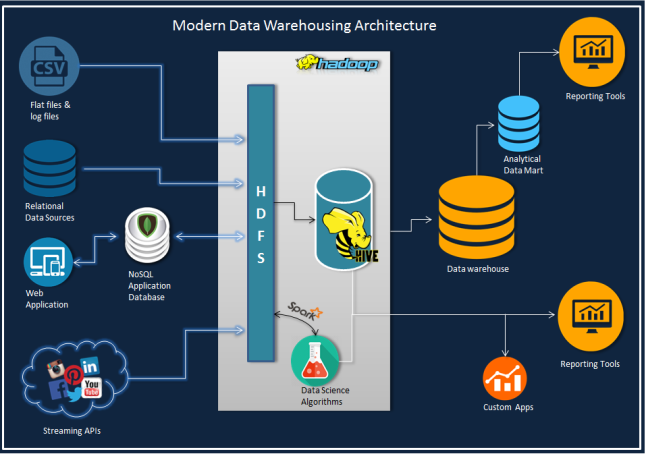

What are the two big components of the Big Data Hadoop framework?

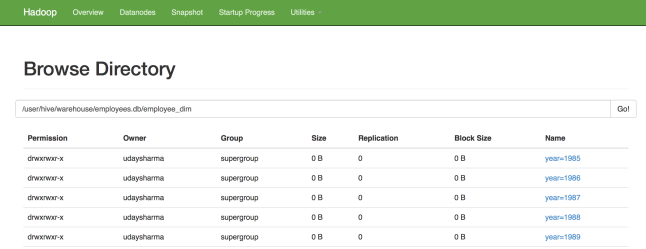

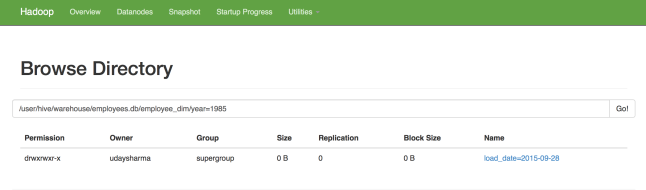

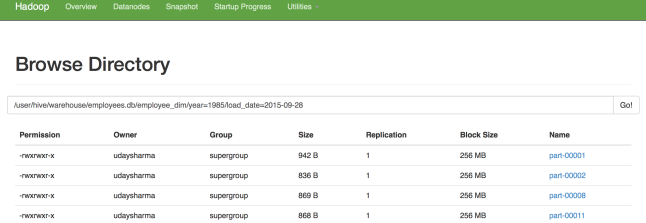

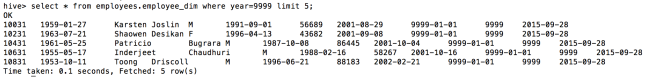

HDFS – Short for Hadoop Distributed File System. It is the distributed file system that serves as Hadoop's storage layer, storing vast amounts of data across the machines of a cluster and retrieving it quickly.

YARN – Stands for Yet Another Resource Negotiator. It aims to allocate resources dynamically and manage workloads.

How do you define logistic regression?

Logistic regression is a statistical technique that analyzes a dataset and predicts a binary outcome. The outcome must take one of two values, such as zero or one, or yes or no.
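
To make the idea concrete, here is a minimal sketch that fits a one-feature logistic regression with plain gradient descent. The data (hours studied versus pass or fail), the learning rate and the number of epochs are assumptions chosen for illustration; in practice you would normally rely on an established library.

```python
import math

def sigmoid(z):
    """Map any real number to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(xs, ys, lr=0.2, epochs=20000):
    """Fit weight w and bias b by batch gradient descent on the log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(w * x + b) - y  # predicted probability minus label
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

# Hypothetical data: hours studied and whether the student passed (1) or not (0).
hours = [1, 2, 3, 4, 5, 6]
passed = [0, 0, 0, 1, 1, 1]
w, b = fit_logistic(hours, passed)

def predict_proba(x):
    """Estimated probability of the positive outcome for input x."""
    return sigmoid(w * x + b)

print(round(predict_proba(2), 3), round(predict_proba(5), 3))
```

The model outputs a probability; turning it into a yes or no answer means comparing it with a threshold, commonly 0.5.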

How is machine learning used in real life?

The following are real-life areas where machine learning is used extensively:

- Robotics

- Finance

- Healthcare

- Social media

- Ecommerce

- Search engines

- Information sharing

- Medicine

What do you mean by Power Analysis?

Power analysis is best defined as the process of determining the sample size required to detect an effect of a given size with a given level of confidence. It helps you estimate the sample size you need and, in the process, aids you in making sound statistical judgments.
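
As a worked illustration, the sketch below estimates the sample size per group for comparing two means, using the normal approximation n = 2 × ((z₁₋α/₂ + z₁₋β) / d)², where d is the standardized effect size. The function name and the default values (5% significance level, 80% power) are assumptions made for this example, and an exact t-test calculation would give slightly larger numbers.

```python
import math
from statistics import NormalDist

def sample_size_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate per-group sample size for a two-sided, two-sample test.

    effect_size is the standardized difference between the two means
    (difference divided by the common standard deviation).
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value of the test
    z_power = NormalDist().inv_cdf(power)          # quantile for desired power
    n = 2 * ((z_alpha + z_power) / effect_size) ** 2
    return math.ceil(n)  # round up to a whole number of observations

for d in (0.2, 0.5, 0.8):
    print(d, sample_size_per_group(d))
```

Smaller effects need far larger samples: halving the effect size roughly quadruples the required sample size.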

To get an in-depth understanding of data science, enroll in our intensive Data Science Certification. The course curriculum is industry-standard and backed by guaranteed placement assistance.

This blog has been sourced from: intellipaat.com/interview-question/data-science-interview-questions

Interested in a career in Data Analyst?

To learn more about Data Analyst with Advanced excel course – Enrol Now.

To learn more about Data Analyst with R Course – Enrol Now.

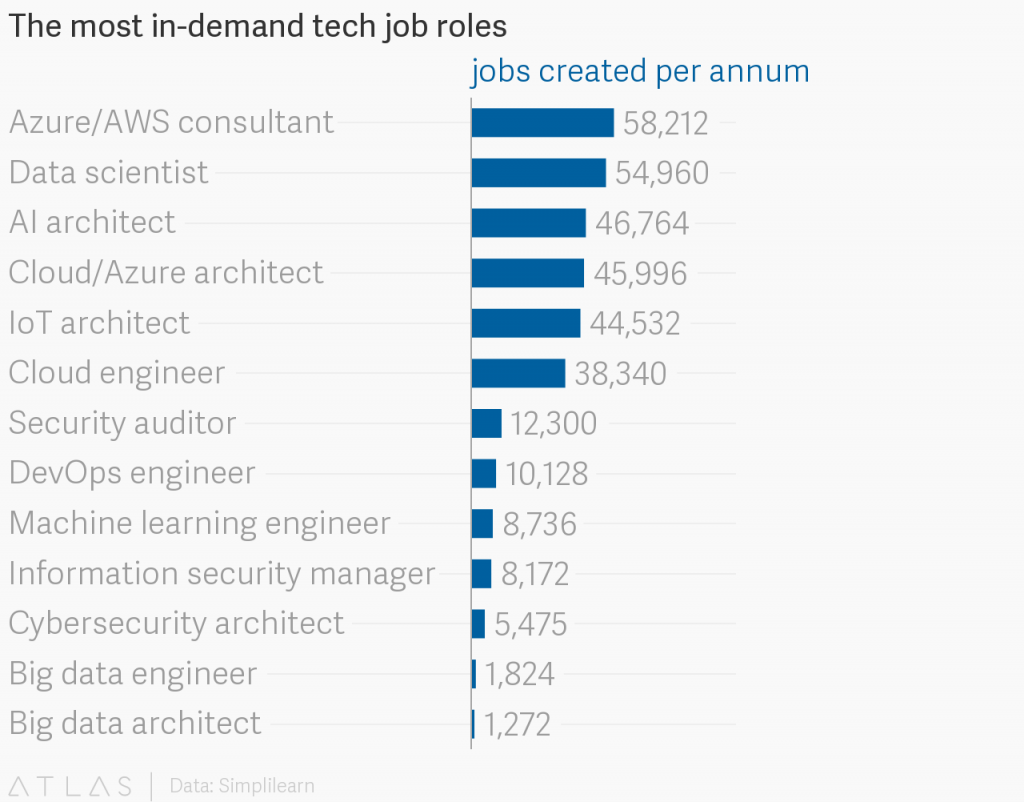

To learn more about Big Data Course – Enrol Now.To learn more about Machine Learning Using Python and Spark – Enrol Now.

To learn more about Data Analyst with SAS Course – Enrol Now.

To learn more about Data Analyst with Apache Spark Course – Enrol Now.

To learn more about Data Analyst with Market Risk Analytics and Modelling Course – Enrol Now.