Analytics professionals earn more than 26% above what software engineers do, and the wave of big data analytics is taking the world by storm. The latest studies show prominent growth in median salary across several experience levels over the past three years (2016 to 2018). In 2019, the average analytics salary stands at 12.6 lakh per annum.

The key takeaway is that analytics professionals continue to out-earn other tech-related job roles. In fact, data analysts out-earn their Java counterparts by nearly 50% in India alone. A recent survey provides a comprehensive view of base salaries and compensation in data science, along with median salaries across diverse job categories, regions, education profiles, experience levels, tools and skills.

In this regard, a spokesperson of a prominent data analytics learning institute said, “The demand for AI skills is expected to increase rapidly, which is also reflected by the fact that AI engineers command a higher salary than peers.” She further added, “Many of our clients have realized that investing in data-driven skills at the leadership level is a determining factor for the success of digital and AI initiatives in the organization. With the increasing adoption of digital technologies, we expect an enduring growth of Data Science and AI initiatives to offer exciting and lucrative career options to new age professionals.”

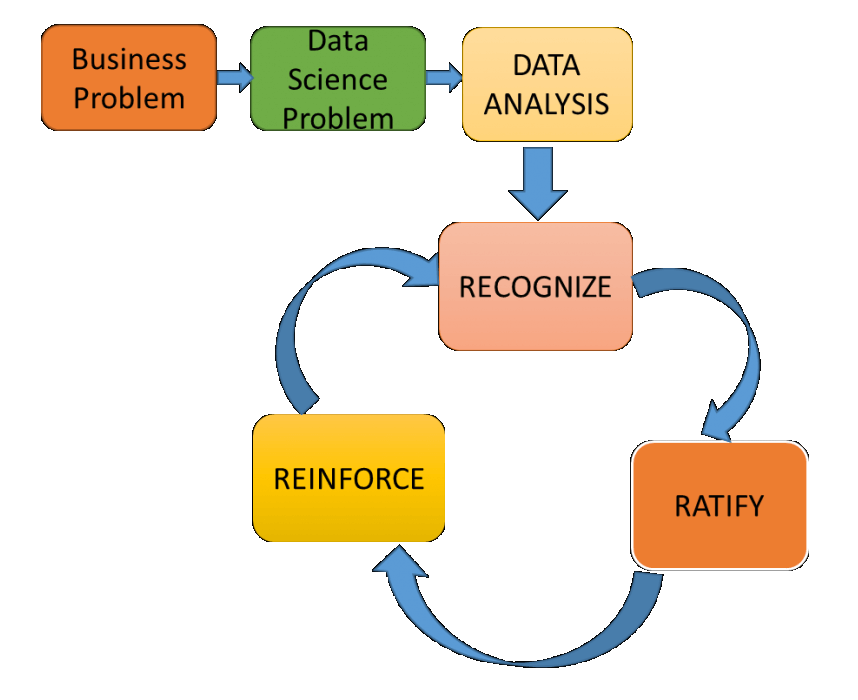

Markets are evolving, and the demand for skilled data scientists is following an upward trend. It is not only technology firms that are posting job offers; the change is evident across industries such as retail, medical and CPG, amongst others. These sectors are enhancing their analytical capabilities, which in turn increases the number of data-centric jobs and the recruitment of data scientists.

Points to Consider:

- At the start of their careers, nearly 76% of data analysts earn a 6-lakh salary per annum.

- The average analytics salary observed in 2018-19 is 12.6 lakh.

- For analytics careers, Mumbai offers the highest compensation at 13.7 lakh per annum, followed by Bangalore at 13 lakh.

- Mid-level professionals proficient in data analytics are in greater demand.

- Knowing Python is an added advantage; Python Programming training will help you earn more. Professionals with Python skills can expect a package of 15.1 lakh.

- Nevertheless, female data scientists often face a pay disparity against their male counterparts. While women take home 9.2 lakh per annum, men in the same designation and profession earn 13.7 lakh.
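To put the figures above in perspective, here is a minimal Python sketch that turns them into percentages. The salary numbers come from the points above; the function name `relative_difference` and the choice of baselines (the male salary for the pay gap, the overall average for the Python package) are illustrative assumptions, not something the survey itself reports.

```python
def relative_difference(value: float, baseline: float) -> float:
    """Return how far `value` is from `baseline`, as a percentage of `baseline`."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (value - baseline) / baseline * 100


# Figures quoted in the article, in lakh per annum.
AVERAGE_SALARY = 12.6
PYTHON_PACKAGE = 15.1
WOMEN_SALARY = 9.2
MEN_SALARY = 13.7

# Assumption: the gap is measured against the male salary.
gender_gap = relative_difference(WOMEN_SALARY, MEN_SALARY)

# Assumption: the Python package is comparable with the overall average.
python_premium = relative_difference(PYTHON_PACKAGE, AVERAGE_SALARY)

print(f"Women earn {abs(gender_gap):.1f}% less than men")            # 32.8%
print(f"Python package is {python_premium:.1f}% above the average")  # 19.8%
```

On these numbers, women earn roughly a third less than men in the same role, while the quoted Python package sits about a fifth above the overall average.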

To conclude, the demand for data science skills is skyrocketing. If you want to enter this flourishing job market, now is the best time! Enroll in a good data analyst course in Delhi and shape your career for success! DexLab Analytics is a top-notch data analyst training institute that offers a range of in-demand skill training courses. Reach us for more.

This article has been sourced from — www.tribuneindia.com/news/jobs-careers/data-analytics-professionals-ride-the-big-data-wave/759602.html
