Big Data is big. We have all established that, and we now know that the noise about Big Data is not just hype but reality. The data generated on Earth doubles every 1.2 years, and this mountain of data keeps streaming in from ever more sources as technology advances.

Let us look at some numbers to understand just how big Big Data is becoming:

- The population of the world is 7 billion, and of these 7 billion, 5.1 billion people use a smartphone

- On average, almost 11 billion texts are sent across the globe every day

- 8 million videos are watched on YouTube alone

- The global number of Google searches every day is 5 billion

But the balance tipped long ago: we keep creating data without consuming enough of it to put it to proper use. What we fail to realize is that we are all data agents, generating more than 25 quintillion bytes of data every day through our online activities. The behaviors that feed this monstrous hill of data include online communications, consumer transactions, online behavior, video streaming services and much more.

Figures from 2012 suggest that the world generated more than 2 zettabytes of data that year. In simpler terms, that is equal to 2 trillion gigabytes. More alarming still, by the year 2020 we are expected to generate 35 trillion gigabytes of data. To manage this growth we will need, by 2020, 10 times the servers we use now, at least 50 times more data management systems, and 75 times the files.
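The conversion from zettabytes to gigabytes can be checked with a few lines of Python. This is only a minimal sketch: it assumes decimal (SI) units, where a gigabyte is 10^9 bytes and a zettabyte is 10^21 bytes, and the function name `zettabytes_to_gigabytes` is made up for this illustration.

```python
# Decimal (SI) storage units, expressed in bytes (an assumption of this sketch).
BYTES_PER_GIGABYTE = 10**9
BYTES_PER_ZETTABYTE = 10**21


def zettabytes_to_gigabytes(zettabytes):
    """Convert a whole number of zettabytes to gigabytes."""
    return zettabytes * BYTES_PER_ZETTABYTE // BYTES_PER_GIGABYTE


# The 2012 figure quoted above: 2 zettabytes.
data_2012_gb = zettabytes_to_gigabytes(2)
print(f"{data_2012_gb:,} GB")  # 2,000,000,000,000 GB
```

One zettabyte works out to a trillion gigabytes, so 2 zettabytes is indeed 2 trillion gigabytes.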

The industry is still not prepared to handle such an explosion, as 80 percent of this data is unstructured. Traditional statistical tools cannot handle it: the data is not only too big but also too complicated and unorganized to be analyzed with the limited functions those tools offer.

Among data analysts there are only 500 thousand computer scientists and fewer than 3,000 mathematicians. The talent pool needed to manage Big Data effectively will therefore fall short by at least 100 thousand minds prepared to untangle the complex knots of intertwined data that hide useful information.

But to truly harness the full potential of Big Data, we need more people and more tools. To find value, we need to mine all this data.

So what is the solution to this even bigger problem of tackling Big Data? We need Big Data analytics. It is more than just a new technological avenue; it is a fresh way of thinking about company objectives and the strategies created to achieve them. A true understanding of Big Data will help organizations understand their customers. Big Data analytics is how they find where the hidden opportunities lie.

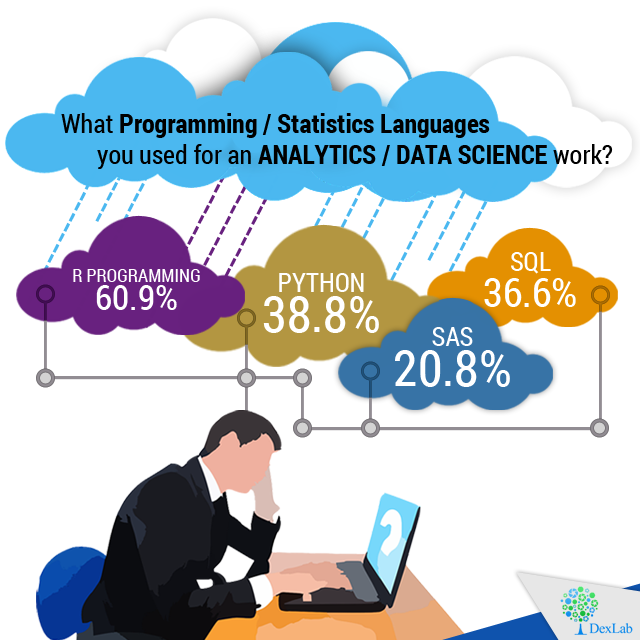

A few advanced tools currently in use in the data analysis industry are SAS, R, Hadoop, Pig, Spark and Hive. SAS is steadily becoming a popular tool for data analysis problems, which is why SAS experts are in high demand in the job market at present. To learn more about Big Data training institutes, follow our latest posts on DexLab Analytics.
