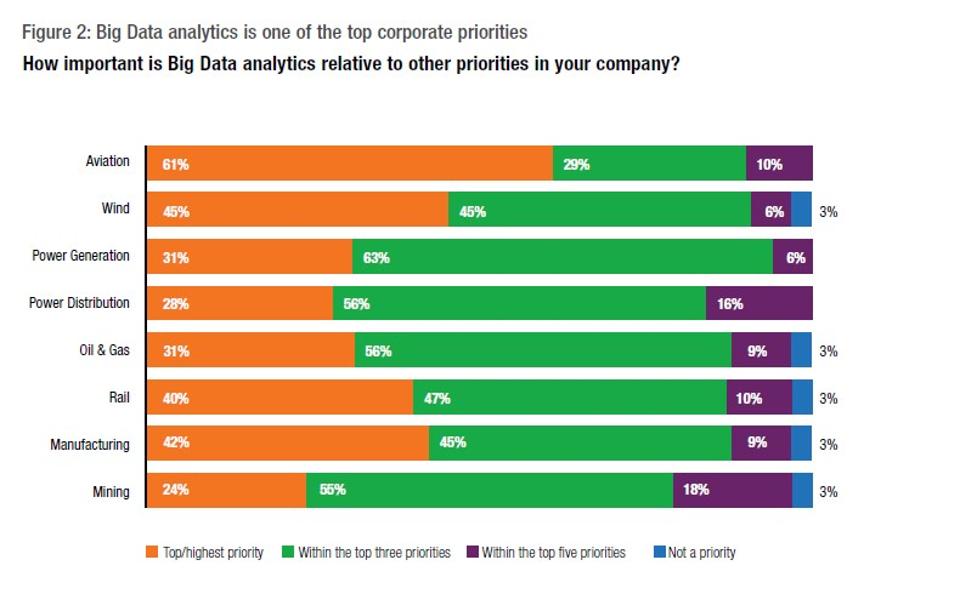

It is no new news that the Big Data and software analytics have had a huge impact on the modern industries. There are several industry disruptors that have surfaced in the past few years and totally changed the lives of all connected humans. Like Google, Tesla and Uber! These are the companies that have used the long list of benefits presented to us by Big Data to expand and infiltrate newer markets, they have helped themselves improve customer relations and enhance their supply chains in multiple segments of market.

As per the latest IDC Research projects the sale of Big Data and analytics services will rise to USD 187 billion in 2019. This will be a huge leap from the USD 122 billion which was recorded in 2015. A major industry that stands to benefit greatly from this expansion which is the manufacturing industry, with the industry revenue projections ranging to USD 39 billion by 2019.

The industry of manufacturing has come a long way from the age of craft industries. But back then, the manufacturing process involved a slow and tedious production processes which only yielded limited amounts of products.

The effects of Big Data Analytics on the Manufacturing sector:

Automated processes along with mechanization have resulted in a generation of large piles of data, which is, much more than what most manufacturing enterprises know what to do with them.

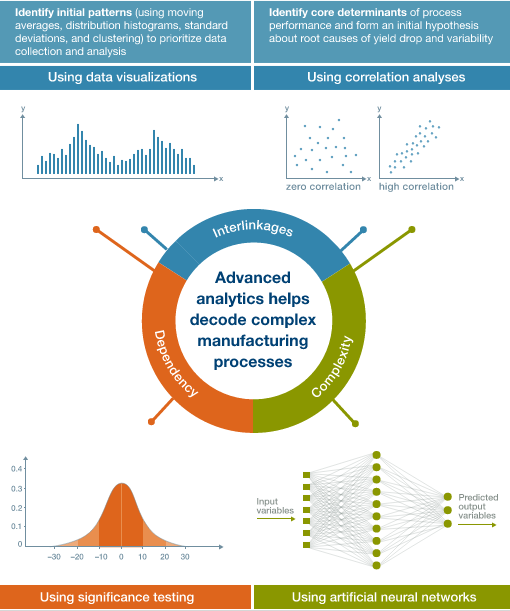

But such data can yield beneficial insights for the manufacturing units to improve their operations and increase their productivity. Here are a few notable ones:

Image Source: mckinsey.com

Savings in cost:

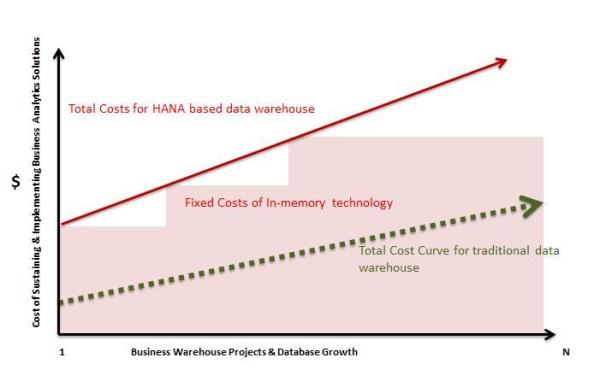

Big data analytics can really help transform the manufacturing process and revolutionize the way they are carried out. The obtained information can be used to reduce the cost of production and packaging during manufacturing. Moreover, companies which implement data analytics can also reduce the cost of transport, packaging along with warehousing. This is in turn can help inventory costs and return i huge savings.

Improvement in safety and quality:

A lot of manufacturing companies are now making use of computerised sensors during the production to sift through low quality products while on the assembly line. With the right software analytics enterprises can use the data generated from such sensors to improve the quality and safety of the products instead of simply throwing away the low quality products after the production.

Image Source: blogs-images.forbes.com

Tightening up the workforce efficiency:

They can also use this data to improve management and employee efficiency. Big data analytics can be used to study the error rates on the production floor and use that information to analyse specific regions where employees are good when they perform under pressure.

Moreover, data analytics may help to speed up the production process n the production floor. S will be especially useful for large firms, which work with large volumes of data.

Better collaboration:

A great advantage of having an IT based data collection and analysis infrastructure is improved information movement within the manufacturing organization. The synergy of flow of information within the management and engineering departments as well as in the quality control sector and between the machine operators and other departments of the company helps them work more efficiently.

The manufacturing industry is much more complex than any other industry, which have implemented the big data analytics. Companies must effectively time the implementation of this software so that there are no losses. And should also pay attention as to from where they can mine the data and the right analytics tools to use for producing feasible and actionable results.

Interested in a career in Data Analyst?

To learn more about Data Analyst with Advanced excel course – Enrol Now.

To learn more about Data Analyst with R Course – Enrol Now.

To learn more about Big Data Course – Enrol Now.To learn more about Machine Learning Using Python and Spark – Enrol Now.

To learn more about Data Analyst with SAS Course – Enrol Now.

To learn more about Data Analyst with Apache Spark Course – Enrol Now.

To learn more about Data Analyst with Market Risk Analytics and Modelling Course – Enrol Now.