Data lake is a term you have probably encountered many times while working with data. As data volumes grow rapidly, data lakes are seen as an attractive way to store and analyze vast amounts of raw data, instead of relying on the traditional data warehouse approach.

But how effective is a data lake at solving big data problems? And what exactly is its purpose?

Let’s start by answering that question.

What exactly is a data lake?

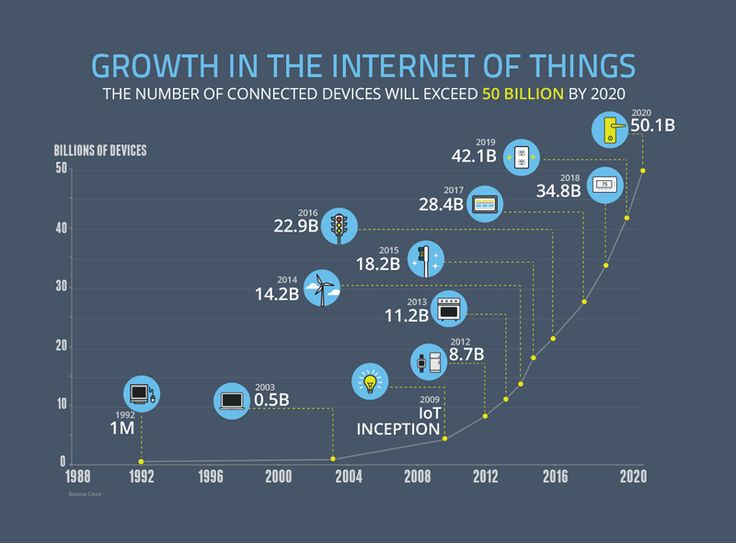

To begin with, the term ‘data lake’ doesn’t refer to a particular product or service. Rather, it is an overall approach to big data architecture that can be summed up as ‘store now, analyze later’. In simple terms, a data lake stores unstructured or semi-structured data from high-volume, high-velocity sources, such as IoT devices, web interactions or product logs, in a single repository that can serve multiple analytic functions and use cases.
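As a rough illustration of ‘store now, analyze later’, here is a minimal Python sketch. It assumes a local temporary folder stands in for the lake and that events are kept as JSON Lines files; the function names `store_raw` and `read_with_schema` and the sample IoT events are hypothetical, not part of any data lake product.

```python
import json
import tempfile
from pathlib import Path


def store_raw(lake_dir: Path, source: str, event: dict) -> None:
    """Append one event, unmodified, to a per-source JSON Lines file."""
    path = lake_dir / f"{source}.jsonl"
    with path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(event) + "\n")


def read_with_schema(lake_dir: Path, source: str, fields: list[str]) -> list[dict]:
    """Apply a schema only at read time: keep the requested fields, fill gaps with None."""
    rows = []
    with (lake_dir / f"{source}.jsonl").open(encoding="utf-8") as f:
        for line in f:
            record = json.loads(line)
            rows.append({name: record.get(name) for name in fields})
    return rows


# Store now: events with different shapes land in the same repository.
lake = Path(tempfile.mkdtemp())  # assumption: a temp folder plays the role of the lake
store_raw(lake, "iot", {"device": "sensor-1", "temp_c": 21.5})
store_raw(lake, "iot", {"device": "sensor-2", "temp_c": 19.0, "battery": 0.8})

# Analyze later: each analysis decides which fields it needs.
temperatures = read_with_schema(lake, "iot", ["device", "temp_c"])
battery_report = read_with_schema(lake, "iot", ["device", "battery"])
print(temperatures)
print(battery_report)
```

Nothing is discarded or reshaped when the events are written, so a later analysis can ask for fields that an earlier one ignored.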

What kind of data are you handling?

Data lakes are mostly used to store streaming data, which typically has the following characteristics:

- Semi-structured or unstructured

- Accumulates quickly: a common streaming workload is tens of billions of records, adding up to hundreds of terabytes

- Generated continuously, even if in small bursts
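To see how tens of billions of records can add up to hundreds of terabytes, here is a back-of-the-envelope estimate in Python. The record count and the average record size are assumed figures chosen only for illustration, and `estimate_terabytes` is a hypothetical helper.

```python
def estimate_terabytes(record_count: int, avg_record_bytes: int) -> float:
    """Raw volume in decimal terabytes (1 TB = 10**12 bytes), before compression."""
    return record_count * avg_record_bytes / 10**12


records = 50_000_000_000  # assumption: 50 billion events
avg_bytes = 4_000         # assumption: about 4 KB per semi-structured event
print(estimate_terabytes(records, avg_bytes))  # 200.0
```

Real volumes depend heavily on record size, compression and retention, so treat the result as an order of magnitude only.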

However, if you are working with conventional, tabular information, such as data from financial, HR and CRM systems, we would suggest a typical data warehouse rather than a data lake.

What tools and skills can your organization provide?

Note that creating and maintaining a data lake is not like handling a database. Managing a data lake demands much more: it typically requires a substantial investment in engineering, especially in hiring big data engineers, who are in high demand and short supply.

If your organization lacks these resources, stick to a data warehouse solution until you are in a position to hire the necessary engineering talent or to use a data lake platform, such as Upsolver, which streamlines creating and administering a cloud data lake without devoting extensive engineering resources to it.

What will you do with the data?

Storing data in a specific structure suits a particular use case, such as operational reporting, but structuring data up front raises costs and can limit your ability to restructure the same data for future uses.

This is why the data lake tagline, ‘store now, analyze later’, is appealing. If you have yet to decide whether the data will feed a machine learning project or future BI analysis, a data lake fits the bill. Otherwise, a data warehouse remains a sound alternative.

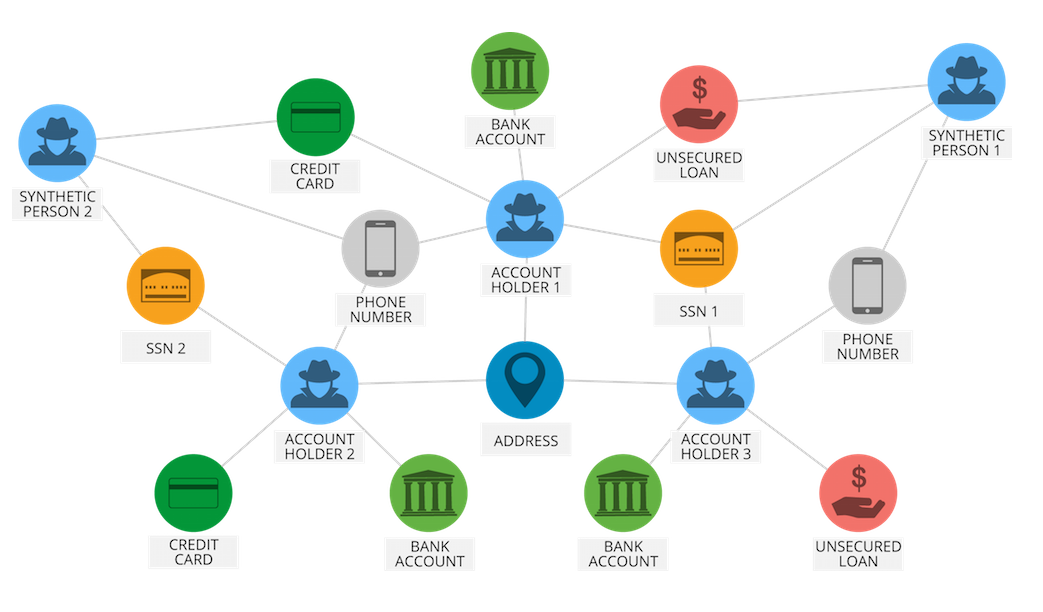

What’s your data management and governance strategy?

In terms of governance, both data warehouses and data lakes pose numerous challenges, so whichever solution you choose, make sure you know how to tackle the difficulties. In data warehousing, the main challenge is to constantly maintain and manage all incoming data and to add it consistently according to the business logic and data model. Data lakes, on the other hand, are messy and difficult to maintain and manage.

Nevertheless, armed with the right data analyst certification, you can learn how to get the best out of a data lake. For more details on data analytics training courses in Gurgaon, explore DexLab Analytics.

The article has been sourced from — www.sisense.com/blog/5-questions-ask-implementing-data-lake
