Fifty-nine percent of surveyed companies are not using predictive models or advanced analytics, says Forbes Insights/Dun & Bradstreet study.

A recent study by Forbes Insights and Dun & Bradstreet, “Analytics Accelerates Into the Mainstream: 2017 Enterprise Analytics Study,” highlights the increasingly indispensable role that analytics plays in today’s business world, from devising strategy to running operations. Its sobering findings point to a pressing need for companies to invest in, implement and prioritize analytics.

The survey was conducted among more than 300 senior executives in Britain, Ireland and North America. The report shows that companies need to invest more in the people, processes and technologies that enable decision support and decision automation.

Bruce Rogers, chief insights officer at Forbes Media, said, “This study underlines the need for continued focus and investment.” He added, “Without sophisticated analysis of quality data, companies risk falling behind.”

“All analytics are not created equal,” said Nipa Basu, chief analytics officer, Dun & Bradstreet. She explained, “This report shows a critical opportunity for companies to both create a solid foundation of comprehensive business data – master data – and to utilize the right kind of advanced analytics. Those that haven’t yet begun to prioritize implementation of advanced analytics within their organizations will be playing catch-up for a long while, and may never fully recover.”

Key findings revealed:

Need for tools and best practices

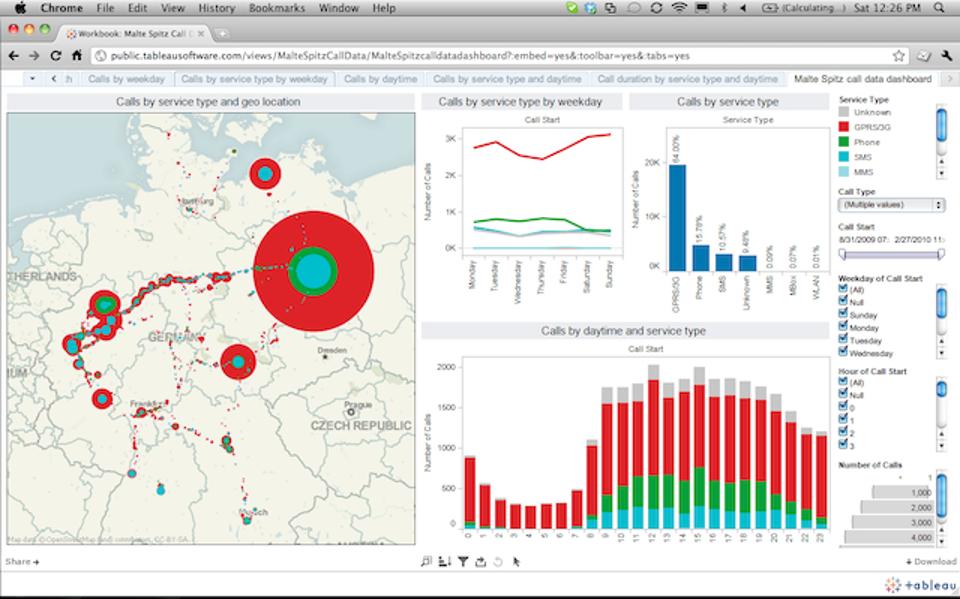

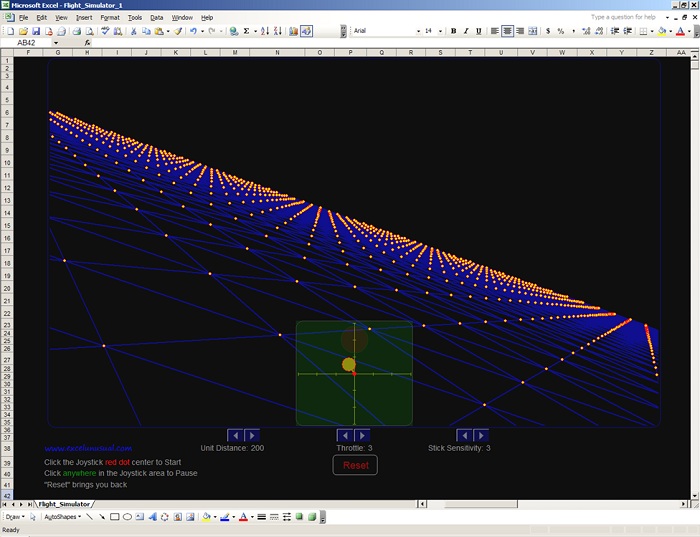

Although data usage and consumption are growing, there is little sophistication in how data are analysed. Of those surveyed, 23% use spreadsheets for all their data work, while another 17% use dashboards that are only a little more efficient than spreadsheets.

The survey found that a mere 41% rely on predictive models and/or advanced analytical and forecasting techniques, and 19% of respondents use no analytical tools more complex than basic data models and regressions.

Skill deficiency stalling analytics success

Twenty-seven percent of respondents identified skill gaps as a major obstacle to their current data and analytics efforts. Fifty-two percent work with third-party data vendors to address these skill shortages. Moreover, 55% of respondents said that third-party analytics partners perform better than in-house staff, pointing to both a shortage of analytics capability among in-house analysts and a dearth of skilled workers.

Investment crunch

Survey respondents cited lack of investment and problems with technology as the top obstacles to meeting their data strategy goals. Despite the increasing use of data, investment in skilled personnel and technology is lagging behind.

CFOs look to data for insights

According to the survey, 63% of respondents in finance roles said they use data and analytics to identify opportunities to fund business growth. Further, 60% of respondents said they rely on data to support long-term strategic planning.

For more blogs and data-related content, follow us at DexLab Analytics. We are a leading Data Science training institute in Delhi offering in-demand Business Analyst training courses in Gurgaon. Reach us today!
