Welcome again! We are back to take up another thrilling topic and dissect it inside out to see what compelling content is hidden within. This time, we take up our newly launched Python Programming Training Module. Python, created by Guido van Rossum, is a simple, interpreted, general-purpose programming language.

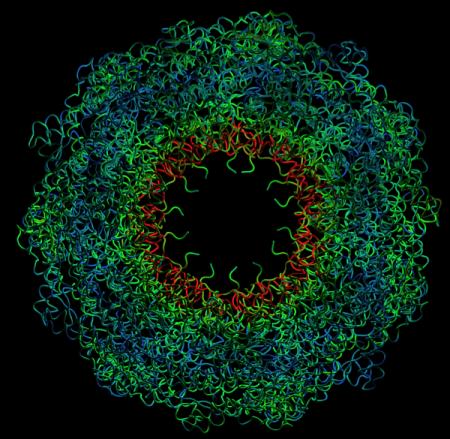

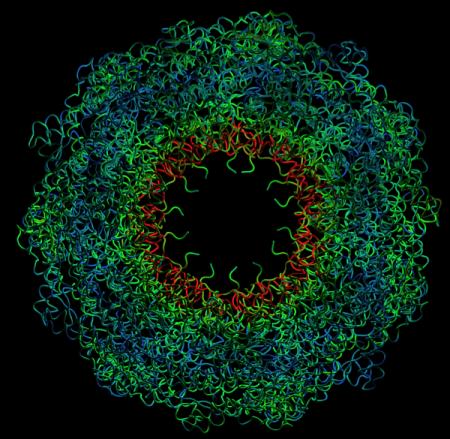

Programmers love Python. Since there is no separate compilation step, the edit-test-debug cycle is fast, which makes debugging Python programs comparatively easy. In this blog, we will chew over the Molecular Modeling Toolkit (MMTK), an open-source Python library for molecular modeling and simulation. Written in Python and C, MMTK focuses on biomolecular systems, covering standard techniques such as molecular dynamics as well as new techniques built on a platform of low-level operations.

Get a Python certification today from DexLab Analytics, a premier Data Science with Python training institute in Delhi NCR.

Development of MMTK began in 1996 under Konrad Hinsen, who was then involved in the Numerical Python project and is currently a researcher in theoretical physics at the French Centre National de la Recherche Scientifique (CNRS). He is also the author of ScientificPython, a general-purpose library of scientific Python code. He had initially worked with the mainstream simulation packages for biomolecules, which were written in Fortran, but found them clumsy to use and especially hard to modify and extend. Modifiability was therefore a crucial design criterion for MMTK, and it received the utmost attention.

The language chosen

Choosing the languages took careful consideration, but the final combination of Python and C was a natural one. The developers were convinced that only a mix of a high-level interpreted language and a CPU-efficient compiled language could serve their purpose well.

For the high-level part, Tcl was rejected because it could not handle MMTK's complex data structures. Perl was also turned down because of its unfriendly syntax and its awkwardly integrated object-oriented mechanism. In contrast, Python ranked high in terms of library support, readability, object-oriented support and integration with compiled languages. On top of that, Numerical Python had just been released at the time, which made Python the go-to option.

For the low-level part, Fortran 77 was rejected because of its antiquated design, portability problems and poor memory management. C++ was considered next, but it was eventually rejected as well because of portability problems between the C++ compilers of those days.

The architecture of the library

The entire architecture of MMTK is Python-centric: to users, it looks and feels like a pure Python library. MMTK makes extensive use of Numerical Python, LAPACK and the netCDF library. It also offers multithreading support for shared-memory parallel machines and MPI-based parallelization for distributed-memory machines.

The core of MMTK is a set of classes that represent atoms and molecules and manage a database of fragment and molecule definitions. Note that biomolecules (mostly proteins, DNA and RNA) are handled by subclasses of the generic Molecule class.
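To make the idea of a generic Molecule class with specialized biomolecule subclasses more concrete, here is a minimal sketch in plain Python. It is an illustration of the design pattern only: every name in it (`FRAGMENT_DATABASE`, `Atom`, `Molecule`, `Protein`, `from_database`, `number_of_atoms`) is hypothetical and does not reproduce MMTK's actual API. The "database" is assumed to be a simple dictionary of heavy-atom names per fragment, ignoring hydrogens (except in water) and chain-terminal atoms.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Hypothetical fragment database: fragment name -> atom names.
# Residues list backbone heavy atoms only (plus CB for alanine);
# a real molecular database is far richer than this.
FRAGMENT_DATABASE: Dict[str, List[str]] = {
    "water": ["O", "H1", "H2"],
    "glycine": ["N", "CA", "C", "O"],
    "alanine": ["N", "CA", "C", "O", "CB"],
}


@dataclass
class Atom:
    name: str
    position: Tuple[float, float, float] = (0.0, 0.0, 0.0)


@dataclass
class Molecule:
    """Generic molecule: a named collection of atoms."""

    name: str
    atoms: List[Atom] = field(default_factory=list)

    @classmethod
    def from_database(cls, name: str) -> "Molecule":
        # Build a molecule from its (hypothetical) database entry.
        return cls(name, [Atom(atom_name) for atom_name in FRAGMENT_DATABASE[name]])

    def number_of_atoms(self) -> int:
        return len(self.atoms)


class Protein(Molecule):
    """Specialized subclass: a protein assembled from residue fragments."""

    def __init__(self, residues: List[str]) -> None:
        atoms: List[Atom] = []
        for residue in residues:
            # Each residue contributes the atoms of its database fragment.
            atoms.extend(Molecule.from_database(residue).atoms)
        super().__init__("protein", atoms)
        self.residues = residues


if __name__ == "__main__":
    peptide = Protein(["glycine", "alanine"])
    print(peptide.number_of_atoms())
```

Because `Protein` inherits from `Molecule`, any code written against the generic class (here, `number_of_atoms`) works unchanged on the specialized one.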

Extensibility and modularity are the two pillars of MMTK's design. New energy terms, specialized data types and algorithms can be added at any time without modifying MMTK's own code. This is possible because MMTK is designed as a library rather than a closed program, which also makes it easier to write application programs on top of it.
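The library-versus-closed-program distinction can be illustrated with a small sketch of how new energy terms might be plugged in from user code. This is not MMTK's force-field interface: `EnergyTerm`, `SimulationSystem`, `HarmonicBond` and `PositionRestraint` are hypothetical names, and units are arbitrary. The point is only that the "library" half never has to be edited for the "user" half to add new terms.

```python
import math
from abc import ABC, abstractmethod
from typing import List, Sequence, Tuple

Vector = Tuple[float, float, float]


# ---- "library" side: written once, never edited by users ----
class EnergyTerm(ABC):
    """Hypothetical base class for anything that contributes an energy."""

    @abstractmethod
    def energy(self, positions: Sequence[Vector]) -> float:
        """Return this term's energy for the given atom positions."""


class SimulationSystem:
    """Hypothetical container that sums the energy of all registered terms."""

    def __init__(self, positions: Sequence[Vector]) -> None:
        self.positions: List[Vector] = list(positions)
        self.terms: List[EnergyTerm] = []

    def add_term(self, term: EnergyTerm) -> None:
        self.terms.append(term)

    def total_energy(self) -> float:
        return sum(term.energy(self.positions) for term in self.terms)


# ---- "user" side: new energy terms added without touching the code above ----
class HarmonicBond(EnergyTerm):
    """E = 0.5 * k * (r - r0)**2 for the distance r between atoms i and j."""

    def __init__(self, i: int, j: int, r0: float, k: float) -> None:
        self.i, self.j, self.r0, self.k = i, j, r0, k

    def energy(self, positions: Sequence[Vector]) -> float:
        r = math.dist(positions[self.i], positions[self.j])
        return 0.5 * self.k * (r - self.r0) ** 2


class PositionRestraint(EnergyTerm):
    """E = 0.5 * k * d**2, where d is atom i's distance from a fixed anchor."""

    def __init__(self, i: int, anchor: Vector, k: float) -> None:
        self.i, self.anchor, self.k = i, anchor, k

    def energy(self, positions: Sequence[Vector]) -> float:
        d = math.dist(positions[self.i], self.anchor)
        return 0.5 * self.k * d ** 2


if __name__ == "__main__":
    system = SimulationSystem([(0.0, 0.0, 0.0), (2.0, 0.0, 0.0)])
    system.add_term(HarmonicBond(0, 1, r0=1.0, k=10.0))
    system.add_term(PositionRestraint(1, anchor=(1.0, 0.0, 0.0), k=4.0))
    print(system.total_energy())  # 5.0 (bond) + 2.0 (restraint) = 7.0
```

Here the bond is stretched by 1.0 from its rest length (energy 0.5 × 10 × 1² = 5.0) and the restrained atom sits 1.0 from its anchor (energy 0.5 × 4 × 1² = 2.0). Adding a third kind of term would again only require a new subclass. `math.dist` requires Python 3.8 or later.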

Nota bene: At the time the source article was written, MMTK consisted of 18,000 lines of Python code, 12,000 lines of hand-written C code and some machine-generated C code. Most of the code was written by one person over eight years as part of a research activity. The user community contributed two modules, a few functions and many ideas.

For more information, check out the Python Training Courses in Noida offered by DexLab Analytics, Delhi. They are affordable as well as program-centric.

This article is sourced from – www.python.org/about/success/mmtk
