Python has become a lingua franca of the computing world. It is among the most sought-after programming languages for deep learning, machine learning and artificial intelligence. Programmers favour it because it is easy to learn and read, and many find it more productive than other languages.

Python is a dynamic, high-level, general-purpose programming language. It is used to develop desktop, web and mobile applications, and also for complex scientific and numeric work, data science, AI and more. The language places a strong emphasis on code readability.

From web and game development to machine learning, from AI to scientific computing, academic research, and data science and analysis, Python is regarded as the real deal. It is useful in domains such as finance, social media and biotech. Developing large software applications in Python is also simpler thanks to its large number of available libraries.

A Python developer usually deals with backend components, connects applications to third-party web services, and supports frontend developers working on web applications. Of course, such applications can be built in other languages, but Python is often the language chosen, and there are several reasons for that.

In this article, we will walk through the top 8 skills required to become a Python developer. These skills are:

- Core Python

- Good grasp of Web Frameworks

- Front-End Technologies

- Data Science

- Machine Learning and AI

- Python Libraries

- Multi-Process Architecture

- Communication Skills

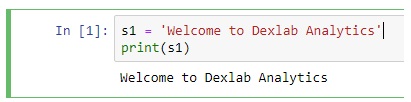

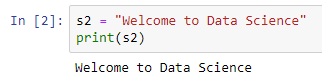

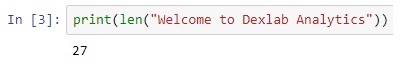

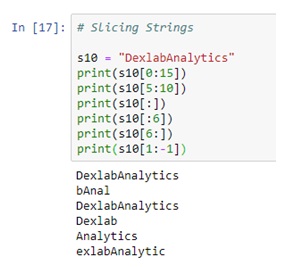

Core Python

This is the foundation for any Python developer. Anyone who wants to succeed in this career needs to understand the core Python concepts. These include the following:

- Iterators

- Data Structures

- Generators

- Object-oriented programming (OOP) concepts

- Exception Handling

- File handling concepts

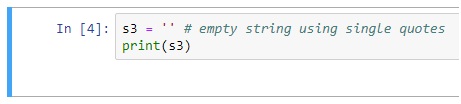

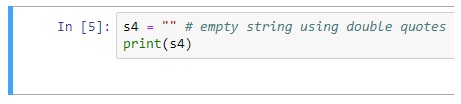

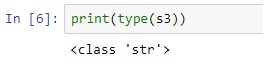

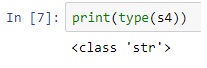

- Variables and data types

However, learning the core language (as mentioned above) is only the first step in mastering this language and becoming a successful Python developer.
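Several of the core concepts listed above can be seen together in one short sketch. The class, function, and file names here (`Countdown`, `squares`, `read_numbers`, `numbers.txt`) are hypothetical and chosen only for illustration.

```python
import os
import tempfile


class Countdown:
    """Iterator that yields start, start - 1, ..., 1 (hypothetical example)."""

    def __init__(self, start):
        self.current = start

    def __iter__(self):
        return self

    def __next__(self):
        if self.current <= 0:
            raise StopIteration
        value = self.current
        self.current -= 1
        return value


def squares(limit):
    """Generator that lazily yields the squares of 1..limit."""
    for n in range(1, limit + 1):
        yield n * n


def read_numbers(path):
    """Read one integer per line, skipping lines that are not integers."""
    numbers = []
    with open(path, encoding="utf-8") as handle:
        for line in handle:
            try:
                numbers.append(int(line))
            except ValueError:
                continue  # exception handling: ignore malformed lines
    return numbers


countdown = list(Countdown(3))
first_squares = list(squares(4))

# File handling: write a small file, then read it back.
path = os.path.join(tempfile.mkdtemp(), "numbers.txt")
with open(path, "w", encoding="utf-8") as handle:
    handle.write("10\nabc\n20\n")
numbers = read_numbers(path)

print(countdown, first_squares, numbers)
```

The iterator class and the generator function produce values one at a time, while `read_numbers` combines file handling with exception handling to skip the malformed `abc` line.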

Good grasp of Web Frameworks

By automating the implementation of redundant tasks, frameworks cut development time and let developers focus on application logic rather than routine elements.

Because it is one of the leading programming languages, there is no scarcity of frameworks for Python. Different frameworks have their own set of advantages and issues. Hence, the selection needs to be made on the basis of project requirements and developer preference. There are primarily three types of Python frameworks, namely full-stack, micro-framework, and asynchronous.

A good Python web developer has a strong command of at least one of the two web frameworks Django and Flask, and ideally both. Django is a high-level Python web framework that encourages clean, pragmatic design, and Flask is a widely used Python micro web framework.
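To give a feel for the routine work a micro-framework takes over, here is a toy routing sketch in plain Python. It is not the API of Flask or Django; the names `routes`, `route`, and `dispatch` are made up for this example, although Flask registers handlers with a similar decorator style (`@app.route`).

```python
# Toy route table: maps a URL path to the function that handles it.
routes = {}


def route(path):
    """Register the decorated function as the handler for `path`."""
    def decorator(handler):
        routes[path] = handler
        return handler
    return decorator


@route("/")
def index():
    return "Hello from the home page"


@route("/about")
def about():
    return "About this site"


def dispatch(path):
    """Return (status, body) for a request path, as a framework would."""
    handler = routes.get(path)
    if handler is None:
        return 404, "Not Found"
    return 200, handler()


print(dispatch("/"))
print(dispatch("/missing"))
```

A real framework adds request parsing, templates, sessions and much more on top of this idea, which is why the developer can concentrate on the handler functions themselves.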

Front-End Technologies (JavaScript, CSS3, HTML5)

Sometimes, Python developers must work with the frontend team to fit the server side and the client side together. This means Python developers need a basic understanding of how the frontend works, what’s possible and what’s not, and how the application will appear.

While there is likely a UX team, a Scrum master, and a project or product manager to coordinate the workflow, it’s still good to have a basic understanding of front-end tasks.

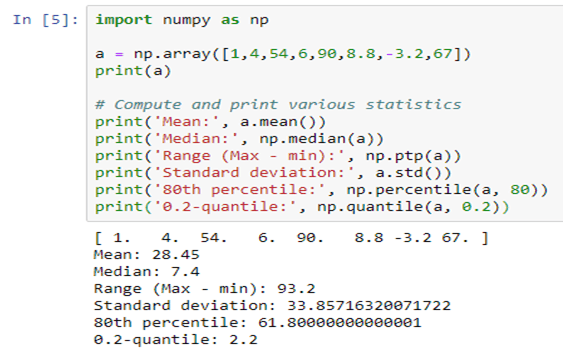

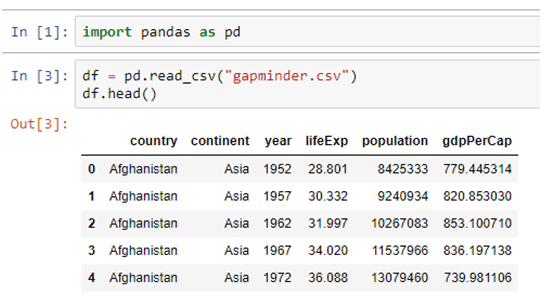

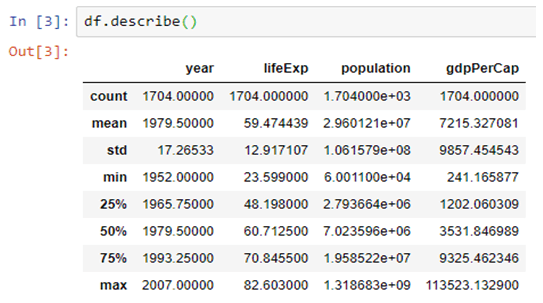

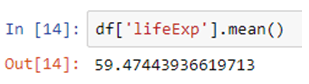

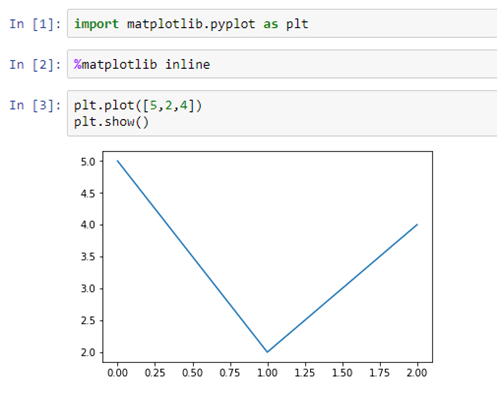

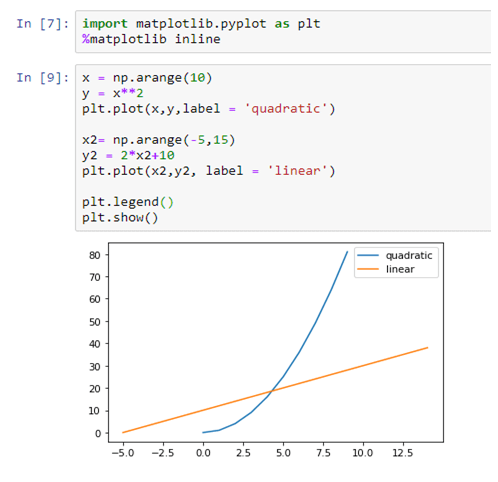

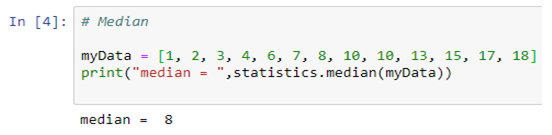

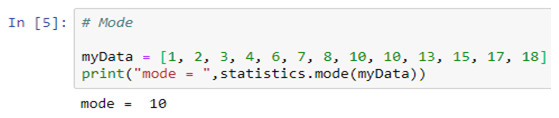

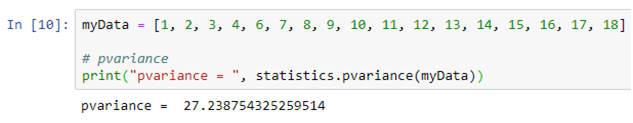

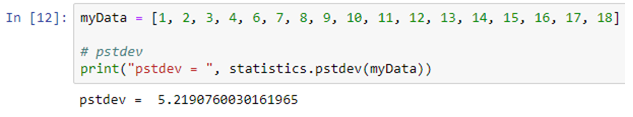

Data Science

Data science offers a world of new opportunities. As a Python developer, you need several prerequisites, starting with topics from high school mathematics such as statistics and probability. Other parts of data science you need to understand and use include SQL, Python packages, data wrangling and cleanup, data analysis, and data visualization.
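As a small illustration of data cleanup followed by basic analysis, the sketch below uses only the standard library. The CSV content is made up for this example, and in practice a package such as pandas would usually handle this kind of work.

```python
import csv
import io
import statistics

# Made-up sample data for illustration only; note the blank and invalid values.
RAW_CSV = """city,temperature
Pune,31.5
Delhi,
Mumbai,29.0
Chennai,n/a
Kolkata,33.5
"""


def clean_temperatures(text):
    """Parse the CSV and keep only rows whose temperature is a valid number."""
    cleaned = {}
    for row in csv.DictReader(io.StringIO(text)):
        try:
            cleaned[row["city"]] = float(row["temperature"])
        except ValueError:
            continue  # data cleanup: drop rows with missing or bad values
    return cleaned


temperatures = clean_temperatures(RAW_CSV)
values = list(temperatures.values())
mean_temp = statistics.mean(values)
median_temp = statistics.median(values)

print(temperatures, mean_temp, median_temp)
```

Two of the five rows are dropped during cleanup, so the mean and median are computed over the three valid readings only.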

Artificial Intelligence and Machine Learning

Artificial Intelligence and Machine Learning (as well as Deep Learning) are constantly growing fields. Python is a leading language in this area, and most popular Machine Learning and Deep Learning frameworks provide a Python interface. Knowing this domain is a huge plus for any developer. If you are into data science, then digging into Machine Learning is definitely a great idea.
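To make the idea of "learning from data" concrete, here is a minimal sketch of one of the simplest models, a straight line fitted by ordinary least squares, written from scratch. The function name `fit_line` and the training data are assumptions for illustration; real projects would normally rely on an established library rather than hand-written code like this.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up training data that lies exactly on y = 2x + 1.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]

slope, intercept = fit_line(xs, ys)

# Use the fitted model to predict y for an unseen input.
prediction = slope * 5.0 + intercept
print(slope, intercept, prediction)
```

The model recovers the slope and intercept from the examples and then applies them to a new input, which is the same train-then-predict pattern that larger frameworks follow.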

Python Libraries

Python libraries certainly deserve a place in every Python developer’s toolbox. Python has a massive collection of libraries, both in its standard library and from third parties. With so many Python libraries out there, though, it’s no surprise that some don’t get all the attention they deserve. Plus, programmers who work exclusively in one domain don’t always know about the goodies available to them for other kinds of work.

Python libraries are extensively used in simplifying everything from file system access, database programming, and working with cloud services to building lightweight web apps, creating GUIs, and working with images, ebooks, and Word files—and much more.
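Two of the tasks mentioned above, file system access and database programming, can be sketched with modules that ship with Python (`pathlib`, `json`, `sqlite3`, `tempfile`). The file name, table, and rows are made up for illustration.

```python
import json
import sqlite3
import tempfile
from pathlib import Path

# File system access with pathlib: write and read a JSON file.
workdir = Path(tempfile.mkdtemp())
config_path = workdir / "settings.json"
config_path.write_text(
    json.dumps({"theme": "dark", "page_size": 20}), encoding="utf-8"
)
settings = json.loads(config_path.read_text(encoding="utf-8"))

# Database programming with sqlite3: an in-memory database needs no server.
connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE books (title TEXT, pages INTEGER)")
connection.executemany(
    "INSERT INTO books VALUES (?, ?)",
    [("Intro to Python", 320), ("Short Guide", 90)],
)
long_books = [
    title
    for (title,) in connection.execute(
        "SELECT title FROM books WHERE pages > ? ORDER BY title", (100,)
    )
]
connection.close()

print(settings["page_size"], long_books)
```

Neither part needs any third-party installation, which shows how much can be done with the standard library before reaching for external packages.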

Multiprocessing Architecture

Multiprocessing refers to the ability of a system to use more than one processor at the same time. Applications in a multiprocessing system are broken into smaller routines that run independently, and the operating system allocates these routines to the available processors, improving the performance of the system. Separately, a Python developer should know the MVC (Model View Controller) and MVT (Model View Template) architectural patterns, which describe how an application's code is organized. Once you understand these architectures, you can more easily solve issues related to the core framework.
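In Python, one common way to spread independent work across processors is the standard library's `multiprocessing.Pool`. The sketch below is a minimal example under that assumption; the function names `count_primes` and `count_primes_parallel` and the chosen limits are illustrative only.

```python
from multiprocessing import Pool


def count_primes(limit):
    """Count the primes below `limit` with simple trial division (CPU-bound)."""
    count = 0
    for candidate in range(2, limit):
        if all(candidate % d for d in range(2, int(candidate ** 0.5) + 1)):
            count += 1
    return count


def count_primes_parallel(limits, workers=2):
    """Run count_primes for each limit in a pool of worker processes."""
    with Pool(processes=workers) as pool:
        return pool.map(count_primes, limits)


if __name__ == "__main__":
    # The guard keeps worker processes from re-running this block on import.
    limits = [10, 100, 1000]
    print(count_primes_parallel(limits))
```

Each call to `count_primes` is independent of the others, which is what makes the work easy to hand out to separate processes. For tasks that mostly wait on the network or disk, processes bring less benefit than they do for CPU-bound work like this.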

Communication Skills

In the best software development firms, teams are made up of strong programmers who work together to achieve the final goal, whether that means finishing a project, creating a new app or helping a startup. However, working in a team means that a developer has to communicate well, not only to get things done but also to keep the documentation clear so that others can easily read it and follow the reasoning behind an idea.

Conclusion

In this write-up, we have covered the top skills one needs to be a successful Python developer. One must have a working knowledge of Core Python and a good grasp of Web Frameworks, Front-End Technologies, Data Science, Machine Learning and AI, Python Libraries, Multi-Process Architecture and Communication Skills. Though there are a few more skills not listed in this blog, mastering the skills above goes a long way towards success in developing large software applications.

As the article shows, Python is all the rage in the computing world. It is no surprise, then, that more and more professionals are taking up courses on machine learning using Python and on Python for data analysis.
