Of late, machines have achieved somewhat human-like intelligence and accuracy. The deep learning revolution has ushered us into a new era of machine learning tools and systems that identify patterns with remarkable accuracy and, in some tasks, predict outcomes better than human domain experts. Yet there is a critical distinction between humans and machines, and it lies in the way we reason: we humans like to reason through high-level semantic abstractions, while machines blindly depend on statistics.

The human learning process is intense and in-depth. We connect the patterns we identify to higher-order semantic abstractions, and our broad knowledge base helps us evaluate the reasons behind those patterns and decide which ones are most likely to represent actionable insights.

Machines, on the other hand, blindly look for strong signals in a pool of data. Lacking any background knowledge or real-world experience, deep learning algorithms cannot distinguish relevant indicators from specious ones. They encode a problem purely in statistical terms rather than semantic ones.

This is why training on diverse data matters so much. A varied dataset exposes the machine to an array of counterexamples, so specious patterns stop lining up with the target and tend to get cancelled out. Segmenting images into objects and performing recognition at the object level has also become standard practice. Still, despite being powerful and highly efficient, current deep learning systems are exceedingly brittle and too easy to fool. They are always on the lookout for correlations in data instead of meaning.
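The effect of counterexamples can be made concrete with a toy sketch. It is not from the original article, and it assumes a hypothetical wolf-versus-husky image task reduced to two binary cues: `snow` (a spurious background cue) and `wolf_shape` (a genuine but noisier cue). The learner is a decision stump: it picks whichever single feature best predicts the label on the training data, with no notion of which cue is meaningful. The names `fit_stump`, `feature_accuracy`, and `make` are illustrative only.

```python
from typing import Dict, List, Tuple

# Each example is (features, label); label 1 = "wolf", 0 = "husky".
# Both feature names are hypothetical stand-ins for cues an image model might latch onto.
Example = Tuple[Dict[str, int], int]


def feature_accuracy(data: List[Example], feature: str) -> float:
    """Fraction of examples where predicting label = feature value is correct."""
    hits = sum(1 for feats, label in data if feats[feature] == label)
    return hits / len(data)


def fit_stump(data: List[Example]) -> str:
    """Pick the single feature with the highest training accuracy.

    This is a purely statistical learner: it cannot tell a meaningful cue
    from one that merely happens to correlate with the label.
    """
    features = data[0][0].keys()
    return max(features, key=lambda f: feature_accuracy(data, f))


def make(snow: int, wolf_shape: int, label: int, n: int) -> List[Example]:
    """Build n identical examples with the given cues and label."""
    return [({"snow": snow, "wolf_shape": wolf_shape}, label)] * n


# Biased training set: every wolf photo has snow and no husky photo does.
# The genuine cue (wolf_shape) agrees with the label only 90% of the time.
biased = (
    make(snow=1, wolf_shape=1, label=1, n=45)
    + make(snow=1, wolf_shape=0, label=1, n=5)
    + make(snow=0, wolf_shape=0, label=0, n=45)
    + make(snow=0, wolf_shape=1, label=0, n=5)
)

# Counterexamples: huskies in snow and wolves on grass break the spurious link.
counterexamples = (
    make(snow=1, wolf_shape=0, label=0, n=40)
    + make(snow=0, wolf_shape=1, label=1, n=40)
)

print(fit_stump(biased))                    # snow
print(fit_stump(biased + counterexamples))  # wolf_shape
```

On the biased data, `snow` is a perfect predictor (100% versus 90%), so the stump picks it. Once the counterexamples are added, `snow` drops to about 56% training accuracy while `wolf_shape` rises to about 94%, and the genuine cue wins. A real deep network is far more complex, but the same statistical pressure applies.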

Are you interested in deep learning? Delhi is home to a good number of decent deep learning training institutes. Just find a suitable one and start learning!

How Do We Fix This?

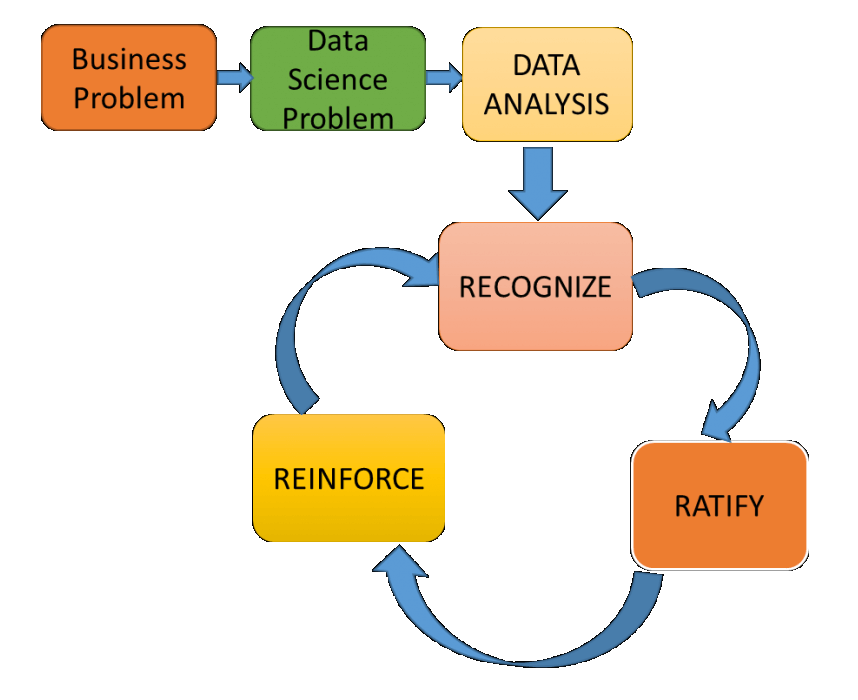

The best approach is to design powerful machine learning systems that can succinctly describe the patterns they find, so that a human domain expert can later review each pattern and approve or reject it. This would help filter out the spurious patterns that purely statistical learners pick up. Combining the substantial knowledge of humans with the raw pattern-finding power of machines could be a game changer.
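The human-in-the-loop idea can be sketched minimally under strong simplifying assumptions. Patterns are single-feature rules, each rendered as a one-line description, and a reviewer callback decides which ones to keep. `Pattern`, `mine_patterns`, `review`, and `PLAUSIBLE_FEATURES` are hypothetical names, not from the article or any library. The "expert" here is simulated by a fixed set of features considered plausible; in practice a person would read each description.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Example = Tuple[Dict[str, int], int]


@dataclass
class Pattern:
    feature: str
    accuracy: float  # fraction of training examples the rule gets right

    def describe(self) -> str:
        """Terse, human-readable summary a domain expert can review."""
        return f"predict label from '{self.feature}' ({self.accuracy:.0%} training accuracy)"


def mine_patterns(data: List[Example], min_accuracy: float = 0.8) -> List[Pattern]:
    """Propose every single-feature rule whose training accuracy clears a threshold."""
    patterns = []
    for feature in data[0][0]:
        hits = sum(1 for feats, label in data if feats[feature] == label)
        accuracy = hits / len(data)
        if accuracy >= min_accuracy:
            patterns.append(Pattern(feature, accuracy))
    return patterns


def review(patterns: List[Pattern], approve: Callable[[Pattern], bool]) -> List[Pattern]:
    """Keep only the patterns the reviewer approves after reading their descriptions."""
    return [p for p in patterns if approve(p)]


# Toy data: 'snow' correlates perfectly with the label, 'wolf_shape' 90% of the time.
data: List[Example] = (
    [({"snow": 1, "wolf_shape": 1}, 1)] * 9
    + [({"snow": 1, "wolf_shape": 0}, 1)]
    + [({"snow": 0, "wolf_shape": 0}, 0)] * 9
    + [({"snow": 0, "wolf_shape": 1}, 0)]
)

candidates = mine_patterns(data)
for p in candidates:
    print(p.describe())

# Simulated expert (hypothetical): only causally plausible cues are approved.
PLAUSIBLE_FEATURES = {"wolf_shape"}
approved = review(candidates, approve=lambda p: p.feature in PLAUSIBLE_FEATURES)
print([p.feature for p in approved])  # ['wolf_shape']
```

The machine proposes both rules, ranking the spurious `snow` cue highest. The reviewer's background knowledge then discards it. Keeping the descriptions short is the design choice that makes this review step practical.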

That said, one of the key reasons machine learning became so appealing compared with human analysis is its uncanny ability to identify unusual patterns that look spurious to humans but are actually genuine signals worth considering. This is especially true in theory-driven domains, such as population-scale human behavior, where observational data is scarce or unavailable. In situations like these, having humans vet the patterns machines find may add little value, because reviewers could reject genuine signals simply for looking counterintuitive.

End Notes

As closing thoughts, we would like to note that machine learning has sparked a renaissance in which deep learning has tackled challenging tasks like computer vision and achieved superhuman accuracy in a growing number of fields. That is certainly cause for celebration.

However, on a wider scale, we have to accept the brittleness of this technology. The main problem with today's machine learning algorithms is that they merely learn the statistical patterns in data without reasoning about what those patterns mean. Once deep learning systems start emphasizing semantics over statistics and incorporate external background knowledge into their decisions, we may finally overcome the failures of present-generation AI.

Artificial Intelligence is the new kid on the block. Enroll in an artificial intelligence course in Delhi and kickstart your dream career! For help, reach us at DexLab Analytics.

This blog post has been sourced from: www.forbes.com/sites/kalevleetaru/2019/01/15/why-machine-learning-needs-semantics-not-just-statistics/#789ffe277b5c